A look at the EU vaccine passport

Posted by ekr on 20 Jul 2021

Dennis Jackson pointed me at the documents for the EU's Digital Green Certificate (DGC) vaccine passport system. At a high level, this is pretty similar to the Excelsior Pass and Vaccine Credentials Initiative systems I wrote about earlier (NYC, VCI), except with some slightly different data formats (COSE instead of JOSE/JWS[1], a new JSON structure[2] for the vaccine certificate data itself rather than reusing an existing one, etc.) In themselves, these seem like sane choices, though it's a little silly that we have multiple groups independently creating pretty isomorphic though slightly different formats to do more or less the same thing. That's the way things go sometimes, but still not great. Moreover, this system does have a number of somewhat odd features, as detailed below.

Trust Structure

As I covered previously, a signature-based credential system needs some mechanism for the verifying app to know which signers are valid. You could in principle just bake all of the valid signers into the app, but that's not very flexible (what happens if you want to add or remove a signer?), so instead what you typically do is bake in some set of entities that you trust and allow those entities to update the list of valid signers in some fashion. For instance, in the WebPKI the way you do this is to have a set of "trust anchors", i.e., entities who are authorized to delegate the right to sign the credential to other entities. When an end-entity (e.g., a Web server) wants to authenticate, it presents both its own certificate and a chain of certificates leading back to one of the trust anchors. This allows the relying party to transitively validate the end-entity by verifying that the end-entity certificate was signed by a certificate that was signed by another certificate, and so on until you get back to a trust anchor.
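
To make the transitive check concrete, here is a minimal Python sketch of walking a chain back to a trust anchor. It is a toy under loudly stated assumptions: HMAC-SHA256 stands in for a real signature algorithm (so each "key" is a shared secret, not a key pair), and the `Cert` layout and the `issue`/`validate_chain` helpers are hypothetical, not any real X.509 library.

```python
import hashlib
import hmac
from dataclasses import dataclass

# TOY ONLY: HMAC-SHA256 stands in for a real signature algorithm (ECDSA, RSA),
# so a "key" here is a shared secret rather than a public/private key pair.
def toy_sign(key: bytes, data: bytes) -> bytes:
    return hmac.new(key, data, hashlib.sha256).digest()

def toy_verify(key: bytes, data: bytes, sig: bytes) -> bool:
    return hmac.compare_digest(toy_sign(key, data), sig)

@dataclass(frozen=True)
class Cert:
    subject: str
    issuer: str
    subject_key: bytes   # the key this certificate vouches for
    signature: bytes     # produced with the issuer's key

    def tbs(self) -> bytes:
        # "To-be-signed" bytes: every field except the signature itself.
        return b"|".join([self.subject.encode(), self.issuer.encode(), self.subject_key])

def issue(subject: str, subject_key: bytes, issuer: str, issuer_key: bytes) -> Cert:
    unsigned = Cert(subject, issuer, subject_key, b"")
    return Cert(subject, issuer, subject_key, toy_sign(issuer_key, unsigned.tbs()))

def validate_chain(chain: list[Cert], trust_anchors: dict[str, bytes]) -> bool:
    """chain[0] is the end-entity certificate; each later entry issued the one
    before it, and the last one must be issued by a known trust anchor."""
    if not chain:
        return False
    for child, parent in zip(chain, chain[1:]):
        if child.issuer != parent.subject:
            return False
        if not toy_verify(parent.subject_key, child.tbs(), child.signature):
            return False
    top = chain[-1]
    anchor_key = trust_anchors.get(top.issuer)
    return anchor_key is not None and toy_verify(anchor_key, top.tbs(), top.signature)

# The relying party ships with only the anchor; the server presents the rest.
anchors = {"Root CA": b"root-secret"}
inter = issue("Intermediate CA", b"inter-secret", "Root CA", b"root-secret")
leaf = issue("www.example.com", b"leaf-secret", "Intermediate CA", b"inter-secret")
print(validate_chain([leaf, inter], anchors))   # True
print(validate_chain([leaf], anchors))          # False: chain stops short of an anchor
```

A real implementation would also check validity periods, key usage, and so on; the point is only that the relying party needs nothing but the anchors, since everything else arrives with the credential.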

This is not how these vaccine passport systems seem to be designed, however, and it's not actually clear to me why. It's possible that the designers are trying to keep the credentials small enough to easily fit in a bar code, but you should be able to fit a certificate in fairly easily: the VCI uses V22 QR codes, which can hold 1195 characters. Even without getting fancy[3] you should be able to put together a custom ECDSA certificate format in about 128-160 bytes. Unlike VCI, the Digital Green Certificate spec allows RSA-2048, which has quite large (256-byte) signatures, so this may be the reason, though of course allowing RSA is itself a design choice (probably the wrong one).
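
A quick byte count supports the 128-160 byte estimate. The layout below is purely hypothetical (a made-up header of version, issuer key ID, validity period, and subject ID); the sizes that matter are standard: a compressed P-256 public key is 33 bytes, a raw P-256 ECDSA signature is 64 bytes, and an RSA-2048 modulus and signature are 256 bytes each.

```python
import struct

# Hypothetical compact certificate layout (NOT any real standard):
#   version (1) | issuer kid (8) | not_before (4) | not_after (4) | subject id (16)
#   followed by the subject public key and the issuer's signature.
HEADER = struct.Struct(">B8sII16s")   # big-endian, no padding

P256_COMPRESSED_PUBKEY = 33   # 1 prefix byte + 32-byte x coordinate
P256_RAW_SIGNATURE = 64       # r || s, 32 bytes each
RSA2048_MODULUS = 256         # the public key is dominated by the 2048-bit modulus
RSA2048_SIGNATURE = 256       # an RSA signature is as long as the modulus

def compact_cert(pubkey: bytes, signature: bytes) -> bytes:
    # Placeholder field values: only the resulting length matters here.
    header = HEADER.pack(1, b"\x00" * 8, 1_625_000_000, 1_656_000_000, b"\x00" * 16)
    return header + pubkey + signature

ecdsa_cert = compact_cert(b"\x02" + b"\x00" * 32, b"\x00" * P256_RAW_SIGNATURE)
rsa_cert = compact_cert(b"\x00" * RSA2048_MODULUS, b"\x00" * RSA2048_SIGNATURE)
print(HEADER.size, len(ecdsa_cert), len(rsa_cert))   # 33 130 545
```

That is 130 bytes for the ECDSA layout versus 545 for RSA-2048 (ignoring the public exponent), before whatever text encoding the QR payload needs, which is consistent with RSA being the real size problem.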

In any case, for both VCI and DGC, the credential itself just contains a reference to the key which (allegedly) signed it (in what's called a kid, or key ID), and the verifying app has to obtain the key itself in some way. For instance, in the California vaccine credential I looked at, there was a link to a Web site containing the key, and (hopefully) the verifier app would be provisioned with a list of all of those valid URLs.[4]

DGC doesn't say how verifier apps get the keys at all. It merely assumes that they have a list of all the Document Signing Certificates (DSCs), obtained in some unspecified fashion, presumably arranged for by the app author (which DGC seems to assume is the national government of wherever you are).
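
On the verifier side, the kid is just a lookup key into a locally provisioned set of DSCs. A minimal sketch, assuming the kid is the first 8 bytes of the SHA-256 hash of the DER-encoded DSC (the derivation the DGC specifications appear to use; treat it as an assumption here); `DscStore` and the placeholder certificate bytes are hypothetical.

```python
import hashlib

def kid_for(dsc_der: bytes) -> bytes:
    # Assumption: kid = first 8 bytes of SHA-256 over the DER-encoded DSC.
    return hashlib.sha256(dsc_der).digest()[:8]

class DscStore:
    """Hypothetical verifier-side store of Document Signing Certificates,
    filled in by whatever out-of-band mechanism the app author arranges."""

    def __init__(self, dscs: list[bytes]):
        self._by_kid: dict[bytes, list[bytes]] = {}
        for der in dscs:
            self._by_kid.setdefault(kid_for(der), []).append(der)

    def candidates(self, kid: bytes) -> list[bytes]:
        # A kid is only a hint: the credential's signature must still be
        # checked against each DSC returned here.
        return self._by_kid.get(kid, [])

store = DscStore([b"placeholder DSC for country A", b"placeholder DSC for country B"])
print(len(store.candidates(kid_for(b"placeholder DSC for country A"))))   # 1
```

Because the kid is only a reference, a credential whose kid matches nothing in the store simply can't be verified; the app has no way to fetch the key from the credential itself.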

Signing Certificate Distribution

More interesting, perhaps, is how the app author learns the list of DSCs. The situation here is quite complicated because the DGC system assumes that both credential issuance and credential verification will be organized along national lines, but that you also want interoperability, so that, for instance, someone with the French verifier app will be able to verify credentials issued in Germany to German nationals, with each government more or less having its own policies and just telling other governments how to verify their credentials (i.e., their list of DSCs). The resulting design is... complicated:

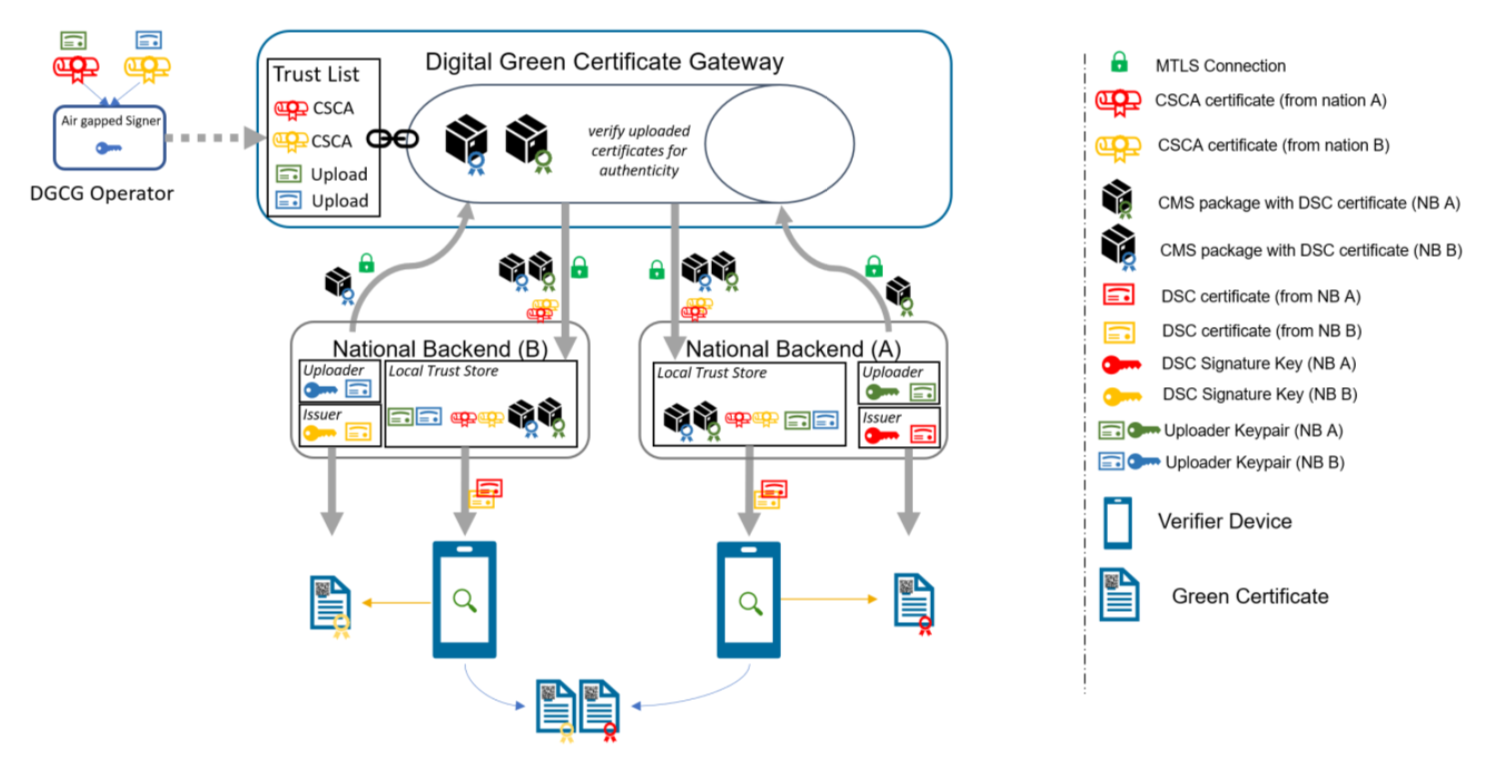

Image source: Technical Specifications for Digital Green Certificates Volume 5.

The general idea here is that each country operates its own infrastructure, complete with a verifier app, signing keys, etc. This is self-contained in the sense that if you didn't care about other countries it would all work on its own. Then there is a centralized Digital Green Certificate Gateway (DGCG) that is responsible for exchanging countries' signing keys so that each country has every other country's keys.

This is all fairly reasonable -- though, as I noted in the previous section, kind of unnecessary if you're just willing to have the credentials carry their own certificate chain. The actual details are a bit odd, however. First, each country has their own Country Signing Certificate Authority (CSCA). They use their CSCA to sign Document Signing Certificates (DSCs) which are then used to sign end entity credentials (i.e., vaccine passports). So far, this is a conventional PKI.

Countries are required to upload their DSCs to the DGCG. This upload is authenticated in two separate ways:

- The national backend authenticates via TLS client authentication with one key (NB_TLS).
- The package containing the DSCs is signed with a separate key (NB_UP).

Each national backend downloads the uploaded DSC packages from the DGCG. The DGCG also publishes a list of the NB_UP and NB_CSCA keys signed with its own key. These can be used by country B to verify the DSC package and the DSCs published by country A into the DGCG.
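
The receiving side of this exchange can be sketched as follows, assuming country B already has the DGCG's key baked in. HMAC-SHA256 is again a toy stand-in for real signatures, and the JSON trust-list layout, the newline-joined package, and `accept_dscs` itself are hypothetical. The ordering of the checks shows where trust comes from: every key used in steps 2 and 3 is read out of the DGCG-signed list, so in the second half of the example a trust list signed with the DGCG key but carrying substitute keys for country A gets its fake DSC accepted.

```python
import hashlib
import hmac
import json

# TOY ONLY: HMAC-SHA256 stands in for real public-key signatures, so every
# "key" below is a shared secret rather than a public/private key pair.
def sign(key: bytes, data: bytes) -> bytes:
    return hmac.new(key, data, hashlib.sha256).digest()

def verify(key: bytes, data: bytes, sig: bytes) -> bool:
    return hmac.compare_digest(sign(key, data), sig)

def accept_dscs(dgcg_key: bytes, trust_list: bytes, trust_list_sig: bytes,
                country: str, package_sig: bytes,
                upload: list[tuple[bytes, bytes]]) -> list[bytes]:
    """Hypothetical checks country B runs on another country's DSC upload.
    `upload` holds (dsc, csca_signature) pairs; returns the accepted DSCs."""
    # 1. The DGCG-signed trust list is the root every later check hangs off.
    if not verify(dgcg_key, trust_list, trust_list_sig):
        raise ValueError("trust list not signed by the DGCG key")
    entry = json.loads(trust_list)[country]
    nb_up, csca = bytes.fromhex(entry["NB_UP"]), bytes.fromhex(entry["CSCA"])
    # 2. The package signature, checked with the NB_UP key from that list.
    package = b"\n".join(dsc for dsc, _ in upload)
    if not verify(nb_up, package, package_sig):
        raise ValueError("package not signed with NB_UP")
    # 3. Each DSC individually, checked with the CSCA key from the same list.
    return [dsc for dsc, sig in upload if verify(csca, dsc, sig)]

# Normal case: country A's keys are what the DGCG publishes.
dgcg_key, nb_up_a, csca_a = b"dgcg-secret", b"nb-up-A", b"csca-A"
trust_list = json.dumps({"A": {"NB_UP": nb_up_a.hex(), "CSCA": csca_a.hex()}}).encode()
dsc = b"DSC #1 from country A"
upload = [(dsc, sign(csca_a, dsc))]
print(accept_dscs(dgcg_key, trust_list, sign(dgcg_key, trust_list), "A",
                  sign(nb_up_a, dsc), upload))   # [b'DSC #1 from country A']

# Whoever holds the DGCG key can swap in its own "country A" keys.
evil_up, evil_csca = b"evil-up", b"evil-csca"
forged = json.dumps({"A": {"NB_UP": evil_up.hex(), "CSCA": evil_csca.hex()}}).encode()
fake_upload = [(b"fake DSC", sign(evil_csca, b"fake DSC"))]
print(accept_dscs(dgcg_key, forged, sign(dgcg_key, forged), "A",
                  sign(evil_up, b"fake DSC"), fake_upload))   # [b'fake DSC']
```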

This all seems extremely complicated, with a number of seemingly redundant authentication mechanisms. For instance:

- The DSCs are signed by both the CSCA and the NB_UP key. The receiving national backend has both public keys, so why isn't one signature good enough?
- The uploaded package is authenticated with TLS but also signed.[5] Why isn't the signature enough?

Moreover, all of this signing obscures the trust relationships, which seem to ultimately go back to trusting the DGCG. The reason is that a receiving country obtains the list of currently valid NB_CSCA and NB_UP keys from the DGCG (signed by some offline DGCG key). This means that if the DGCG is compromised, it can just replace those keys with keys of its choice and thus impersonate any other country. There are a number of designs which seem like they would be a lot simpler and provide similar security properties:

- Have the DGCG directly sign the DSCs, with the national backends acting as "registration authorities" for the DGCG (though it's possible this is undesirable for political reasons).
- Have the DGCG just sign the CSCA certificates; the national backends can then upload new DSCs as they are minted (note that the package need not be signed because the DSCs themselves are signed).

If you didn't want to trust the DGCG, you'd need some other structure. For instance, countries could get each other's CSCA keys directly, or there could at least be some Certificate Transparency-type system to detect DGCG misbehavior.

Note that we (maybe) still need some way to deal with revocation, but I don't think that this system makes that dramatically easier.

Revocation

One topic that often comes up in these designs is "revocation", i.e., signaling that a given certificate should not be trusted. This is a whole topic in the WebPKI, but of course the relevance depends on the setting. I don't think it's that useful to individually revoke people's vaccine credentials on a small scale (e.g., because you discover that they didn't have an immune response or something). We all know that vaccination is imperfect, and so a bit of error here isn't the end of the world. The cases that seem more interesting are ones where we believe that a large number of credentials might have been misissued. For instance:

- One of the DSC keys is compromised.
- We discover that a given vaccine site has been selling fake credentials (e.g., reporting that someone was vaccinated but not actually vaccinating them).

It's not entirely clear to me how important these cases are. As above, we know that even if vaccination information is perfectly accurate, some people won't be protected, so it's possible that some level of fraud is tolerable. But if we're not willing to tolerate it and we do want to revoke the credentials we know were issued incorrectly, things get more complicated.

The basic issue here is the number of credentials you need to revoke. If we know that every credential issued by a given DSC is fraudulent, then we can just publish that that DSC is not to be trusted (never mind how we do that). But what if only some of those credentials are fraudulent, for instance if a lot of legitimate credentials were issued before the DSC key was compromised, or if a DSC was serving two sites and only one of them was committing fraud? On the Web this is sometimes handled by just revoking the CA and forcing all its customers to get new certificates, but that's not going to work well here: the vaccine credentials are static data (often printed on paper!), so there's no real way to update them, and invalidating a DSC also invalidates a lot of valid credentials. We quickly get to the point where you need to publish a list of all the invalid credentials (assuming we can in fact identify them), potentially in some compressed form. In the WebPKI, this is actually somewhat challenging because there is a lot of revocation, and so the revocation list can get quite large. My best guess is that that won't happen here, but if it does you would presumably need to figure something out. Note that the DGC system seems to only allow for revoking DSCs, which doesn't really solve the problem for the reasons above.
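
One possible "compressed form" for a list of revoked credentials is a Bloom filter, a technique chosen here for illustration rather than anything the DGC specifications define. The sketch below assumes credentials are identified by byte strings (the IDs are made up) and uses the standard Bloom filter sizing formulas.

```python
import hashlib
import math

class BloomFilter:
    """Minimal Bloom filter over revoked credential IDs: it never misses a
    revoked ID, but may flag a valid one at roughly the configured rate."""

    def __init__(self, expected_items: int, fp_rate: float):
        # Standard sizing: m = -n ln(p) / (ln 2)^2 bits and k = (m / n) ln 2 hashes.
        self.m = math.ceil(-expected_items * math.log(fp_rate) / math.log(2) ** 2)
        self.k = max(1, round(self.m / expected_items * math.log(2)))
        self.bits = bytearray((self.m + 7) // 8)

    def _positions(self, item: bytes) -> list[int]:
        # Double hashing: derive all k bit positions from one SHA-256 digest.
        digest = hashlib.sha256(item).digest()
        h1 = int.from_bytes(digest[:8], "big")
        h2 = int.from_bytes(digest[8:16], "big") | 1
        return [(h1 + i * h2) % self.m for i in range(self.k)]

    def add(self, item: bytes) -> None:
        for pos in self._positions(item):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def __contains__(self, item: bytes) -> bool:
        return all(self.bits[pos // 8] >> (pos % 8) & 1 for pos in self._positions(item))

# Hypothetical credential IDs; real DGC identifiers have their own format.
revoked = BloomFilter(expected_items=10_000, fp_rate=0.001)
for i in range(10_000):
    revoked.add(f"revoked-credential-{i}".encode())
print(len(revoked.bits))                    # size of the published filter, in bytes
print(b"revoked-credential-42" in revoked)   # True
```

At 10,000 revoked IDs and a 0.1% false-positive rate this comes to about 18 KB, versus 320 KB for a plain list of 32-byte hashes. The price is that a hit might be a false positive, so a verifier would presumably need to confirm it against the full list before rejecting someone.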

Credential Loading

Unlike the credentials issued by VCI, you can't just load the digital green certificate onto your phone. Instead, there is a "2FA" process involving a special code called a TAN (it's not clear to me what this is an acronym for). The idea here is that the credential is provided in a printed out QR code along with the TAN (provided via SMS or e-mail or something) and that (1) you need the TAN in order to load the credential onto the phone and (2) the TAN is invalidated once it's used in order to prevent the credential from being loaded onto two separate phones.

Here's what the spec says (Section 5.2):

> TAN validation is an easy matter—upon scanning a DGC, the wallet app creates a cryptographic key pair. Then, the TAN and the DGCI are signed with the newly created private key and uploaded together with the corresponding public key. The certificate backend checks the signature and verifies whether
>
> - The DGCI exists
> - There haven’t been more than the specified number of TAN validation requests
> - The submitted TAN corresponds to the TAN stored together with the DGCI
> - The stored TAN is not expired
>
> If all these points are positively answered, TAN validation has been successful, the user’s public key is stored together with the DGCI, and the corresponding DGC is marked as “registered” (meaning it can’t be registered again—a digitized Green Certificate can’t be digitized by any other wallet app). Otherwise, an appropriate error code is returned.
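
The backend checks in the quoted passage can be sketched roughly as follows. Nearly everything here is an assumption: `DgcRecord`, the error strings, `MAX_ATTEMPTS` (the text only says "the specified number"), the `dgci|tan` encoding of the signed data, and the pluggable `verify` callback, which stands in for whatever signature algorithm the wallet actually uses.

```python
import hmac
import time
from dataclasses import dataclass
from typing import Callable, Optional

MAX_ATTEMPTS = 3  # hypothetical; the quoted text only says "the specified number"

@dataclass
class DgcRecord:
    """Hypothetical backend row stored alongside a DGCI."""
    tan: str
    tan_expires_at: float                      # Unix timestamp
    attempts: int = 0
    registered_pubkey: Optional[bytes] = None

Verify = Callable[[bytes, bytes, bytes], bool]  # (pubkey, data, signature) -> ok?

def validate_tan(db: dict[str, DgcRecord], dgci: str, tan: str, pubkey: bytes,
                 signature: bytes, verify: Verify, now: Optional[float] = None) -> str:
    """Sketch of the quoted checks; returns "ok" or a made-up error code."""
    now = time.time() if now is None else now
    # The wallet signed the DGCI and TAN with its fresh key (encoding assumed).
    if not verify(pubkey, f"{dgci}|{tan}".encode(), signature):
        return "bad_signature"
    record = db.get(dgci)
    if record is None:                                 # the DGCI exists
        return "unknown_dgci"
    record.attempts += 1
    if record.attempts > MAX_ATTEMPTS:                 # not too many requests
        return "too_many_attempts"
    if record.registered_pubkey is not None:           # can't be registered twice
        return "already_registered"
    if not hmac.compare_digest(record.tan.encode(), tan.encode()):  # TAN matches
        return "wrong_tan"
    if now > record.tan_expires_at:                    # stored TAN not expired
        return "tan_expired"
    record.registered_pubkey = pubkey                  # DGC is now "registered"
    return "ok"

def stub_verify(pubkey: bytes, data: bytes, signature: bytes) -> bool:
    # Hypothetical stand-in for a real signature check over `data`.
    return signature == b"valid"

db = {"dgci-1": DgcRecord(tan="7XK2QP", tan_expires_at=time.time() + 3600)}
print(validate_tan(db, "dgci-1", "7XK2QP", b"phone-1", b"valid", stub_verify))  # ok
print(validate_tan(db, "dgci-1", "7XK2QP", b"phone-2", b"valid", stub_verify))  # already_registered
```

All of this state lives in the issuer's backend; nothing about the credential itself changes when it is "registered".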

It's pretty hard to understand what's going on here. Superficially, the idea seems to be to ensure that the DGC is bound to the user's phone (bootstrapped using the TAN) and can't be replayed by another user, by registering the public key generated on the initial import. But in order to make that work, you would need credential verifiers to actually verify that the person in front of them had the corresponding private key, and that's not what happens. Instead, the official wallet just refuses to load the credential if the TAN doesn't match. However, nothing stops me from writing an unofficial wallet which loads any credential it sees (e.g., because it's also a verifier app). If you actually wanted to prevent this kind of replay, you would need the verifier to have the public key that the user registered and then force the user's app to prove knowledge of the corresponding private key, for instance by signing something,[6] but this is inconsistent with having a paper-based credential. And of course as soon as you allow paper-based credentials -- which can be copied indefinitely -- there isn't much point in restricting digital copying.
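
A challenge-response check of the kind described might look like the sketch below. It assumes the verifier can obtain the public key registered during the TAN step (DGC provides no such mechanism) and that the holder has a device that can answer a fresh challenge, which a paper credential cannot. The signature scheme is deliberately toy textbook RSA over two fixed Mersenne primes, purely to keep the example within Python's standard library; `wallet_prove` and `verifier_check` are hypothetical names.

```python
import hashlib
import secrets

# TOY textbook RSA over fixed Mersenne primes: for illustration only, NOT secure.
# A real wallet would use a standard scheme such as ECDSA with a random key.
P, Q, E = 2**61 - 1, 2**89 - 1, 65537
N = P * Q
D = pow(E, -1, (P - 1) * (Q - 1))   # private exponent; needs Python 3.8+

def digest_int(data: bytes, n: int) -> int:
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % n

def wallet_prove(challenge: bytes) -> int:
    # On the phone: sign the verifier's fresh challenge with the private key.
    return pow(digest_int(challenge, N), D, N)

def verifier_check(registered_pubkey: tuple[int, int], challenge: bytes, proof: int) -> bool:
    # On the verifier: only the public key (n, e) registered during the TAN step.
    n, e = registered_pubkey
    return pow(proof, e, n) == digest_int(challenge, n)

challenge = secrets.token_bytes(16)   # fresh per scan, so old proofs can't be replayed
print(verifier_check((N, E), challenge, wallet_prove(challenge)))   # True
```

Even in this form the user controls the wallet, so nothing stops them from copying the private exponent `D` to a second device.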

Moreover, none of this is necessary because the credentials are tied to a user's identity and you have to present some sort of biometric ID to prove it's really you. This means that it's not a problem to allow copying of the credential, which really only exists to prove that someone with your name was vaccinated (see here for more on this).

Summary

None of the stuff I've listed above is really fatal, and as described the system should probably work OK. It's just a bunch of extra complexity that doesn't seem to do much in particular. As I said at the beginning, it's kind of unfortunate that we have all these independent groups building these systems: this tends to lead to a bunch of somewhat similar and yet different designs that each have their own idiosyncrasies and none of which has gotten the scrutiny it really needs. Maybe eventually we'll see an attempt at a common protocol, though of course by then it's likely that people will be attached to those idiosyncrasies and so we'll get a system that's more like a merger of all the designs than a single design that picks the best features of each.

Acknowledgement: Thanks to Dennis Jackson for pointing out some of the issues here, especially the ones about loading the passport onto the phone. Mistakes are mine.

[1] For the uninitiated, there are at least four standard object security mechanisms. The general situation here is that every few years a new generic format for serializing structured data comes along (e.g., ASN.1/BER, XML, etc.) and naturally people want to send around data that's been encoded in that format. But they also want to sign and encrypt that data, and that signing and encryption requires its own formatting to carry metadata like key identifiers, signatures, wrapped keys, and the like. Naturally, people don't want to lug around two serialization formats and so now there's a need to invent a new secure object format that uses the new serialization format. Hence, we have CMS (in ASN.1/BER), XMLDSIG (in XML, a W3C spec this time), JOSE (in JSON), and COSE (in CBOR), plus the ones that are tied to some conceptual application like OpenPGP and HTTP object encryption. Of course, all of these are different, reflecting the prevailing design sensibilities of the time and the hope that this time we'd get it right or at least not bungle it so badly (full disclosure: I was part of the early JOSE effort, but checked out later on). Mercifully, COSE was defined shortly after JOSE and so is mostly a port of the JOSE structures -- for good or ill -- into CBOR.

[2] Remember what I said a minute ago about not wanting to use two serialization formats together? Well, that's what we have here. I have no idea why the EU decided to use COSE/CWT instead of JOSE/JWT.

[3] In this case, "fancy" would mean something like BLS, which is both smaller and allows for aggregated signatures in which you can compress multiple signatures into the size of one.

[4] Note that this implicitly trusts the WebPKI because anyone who can impersonate the Web site can just substitute their own key.

[5] Incidentally, the package is signed with CMS, so here we have a system that has three separate serialization formats: ASN.1/BER, CBOR (via COSE), and JSON.

[6] Note that you wouldn't need to require that the TAN be single use; you just need to ensure that only the rightful user can register, not that they can't register multiple times. And of course, because the user has to be assumed to control their app, they can just copy their private key around.