I automatically generated minutes for five years of IETF meetings

using AI, of course

Posted by ekr on 31 Dec 2025

It is characteristic of all committee discussions and decisions that every member has a vivid recollection of them and that every member’s recollection of them differs violently from every other member’s recollection. Consequently, we accept the convention that the official decisions are those and only those which have been officially recorded in the minutes by the officials. —Sir Humphrey Appleby, "Yes, Prime Minister": S2E1.

Motivation

Like other standards development organizations (SDOs), the IETF requires that meetings be minuted:

All working group sessions (including those held outside of the IETF meetings) shall be reported by making minutes available. These minutes should include the agenda for the session, an account of the discussion including any decisions made, and a list of attendees. The Working Group Chair is responsible for insuring that session minutes are written and distributed, though the actual task may be performed by someone designated by the Working Group Chair. The minutes shall be submitted in printable ASCII text for publication in the IETF Proceedings, and for posting in the IETF Directories and are to be sent to: minutes@ietf.org

The IETF doesn't have any professional staff support for taking minutes, which means that the working group members have to record the minutes. The outcome of this is predictably bad:

- The minutes are bad.

  - Taking good minutes is hard work and nobody at the IETF is really trained to do it. It's easy for people to transcribe events incorrectly, miss important events, etc.

- Taking minutes interferes with WG participation.

  - Taking minutes makes it hard to participate in the WG, partly because you're too busy writing stuff down to think about what to say and partly because you can't easily minute yourself, so either someone has to take over while you participate or you end up with a gap in the minutes.

- People avoid taking minutes.

  - Because minute taking isn't fun, it's hard to get volunteers. The IETF doesn't have a system for drafting people to do the job, so what happens instead is that the WG chairs find themselves begging for people to step forward and take minutes at the beginning of each WG meeting ("we can't start without a minute taker") until eventually some poor sucker grudgingly agrees to do it.

A Technical Fix

This post is actually two stories in one:

- My attempt to produce a technical fix for the minutes problem.

- Some reflections on using AI as a tool to produce that technical fix.

What connects these two threads is that this project would have been a lot of work a few years ago, but now it's basically trivial.

Over the past 10+ years, there have been some modest improvements which have made things a little easier:

- It's now standard practice to take minutes in a shared notepad, which makes it possible for multiple people to take minutes, or for someone to fill in a bit when the main minute taker wants to participate. Nevertheless, as mentioned above, taking minutes is not a popular activity.

- The IETF now makes full video and audio recordings of every WG meeting, complete with automated transcripts. Many of the people I know find the minutes so unreliable that they just go back to the video whenever they want to know what happened.

I'm a long-time minute-taking evader, but I'm also a strong believer in recognizing when people don't like doing some things and trying to find ways to stop them from having to do them. At a recent interim meeting in Zurich for the AIPREF WG, some of us got so frustrated with the whole thing that we proposed that the IETF dispense with minute taking entirely and just declare the automated transcripts to be the minutes. This suggestion was not popular, and after looking at the transcripts in more detail I have some sympathy for the objectors, as they're pretty hard to work with (more on this below).

Not totally deterred, I decided to take another run at a technical fix: maybe the transcripts aren't good enough, but what if we could just automatically make minutes from the transcripts? Fortunately, I had another meeting to attend the next week—and hence a need for a side project to distract me—and a copy of Claude Code, and thus ietfminutes.org was born.

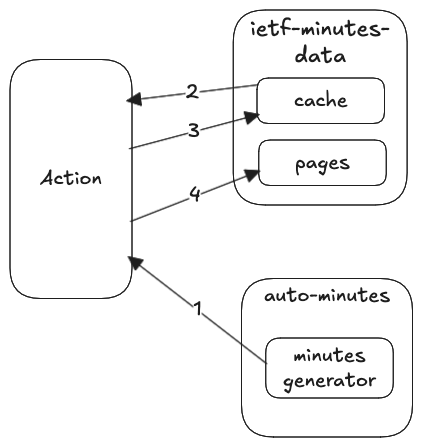

Architectural Overview

The diagram below shows the overall architecture of ietfminutes.org.

The basic concept here is unbelievably simple and obvious: take the transcripts that we're already generating and ask an LLM to make minutes out of them. Here's the prompt, with some re-flowing.

```
You are an expert technical writer for the IETF. Convert the following meeting transcript
into well-structured meeting minutes in Markdown format. It should contain an account
of the discussion including any decisions made.

Session: ${sessionName}

Requirements:
- Start with a # header with the session name
- Include a ## Key Discussion Points section with bullet points
- Include a ## Decisions and Action Items section if applicable
- Include a ## Next Steps section if applicable
- Be concise but capture all important technical discussions
- Use proper Markdown formatting
- Focus on technical content and decisions
- Remember that IETF participants are individuals, not representatives of
  companies or other entities
- Remember that consensus is not judged in IETF meetings; it is established separately.
  It's OK to say things like "a poll of the room was taken" or
  "a sense of those present indicates..."

The transcript is in JSON format with timestamps and text. Here is the transcript:

${transcript}

Generate the meeting minutes:
```

The code to talk to the LLM is just a trivial use of the service API, namely feeding it the prompt with the transcript filled in and getting back the summary.
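The post doesn't show the LLM call itself, so here is a minimal sketch in TypeScript (chosen because the project is built with npm and 11ty). `LlmClient` is a hypothetical interface standing in for the Claude or Gemini SDK, the function names are illustrative, and the prompt's requirement list is abbreviated.

```typescript
// Hypothetical stand-in for whichever vendor SDK is in use (Claude, Gemini, ...):
// the pipeline only needs "prompt in, generated text out".
interface LlmClient {
  complete(prompt: string): Promise<string>;
}

// Requirement bullets from the prompt above; the list is abbreviated here.
const REQUIREMENTS: string[] = [
  "Start with a # header with the session name",
  "Include a ## Key Discussion Points section with bullet points",
  "Include a ## Decisions and Action Items section if applicable",
  "Include a ## Next Steps section if applicable",
  // ...the remaining requirements from the prompt above would follow here.
];

// Fills in the ${sessionName} and ${transcript} slots of the prompt.
function buildPrompt(sessionName: string, transcriptJson: string): string {
  return [
    "You are an expert technical writer for the IETF. Convert the following meeting transcript",
    "into well-structured meeting minutes in Markdown format. It should contain an account",
    "of the discussion including any decisions made.",
    "",
    `Session: ${sessionName}`,
    "",
    "Requirements:",
    ...REQUIREMENTS.map((r) => `- ${r}`),
    "",
    "The transcript is in JSON format with timestamps and text. Here is the transcript:",
    "",
    transcriptJson,
    "",
    "Generate the meeting minutes:",
  ].join("\n");
}

// One session in, one Markdown document out. Retries, timeouts, or rate
// limiting would wrap this call.
async function generateMinutes(
  client: LlmClient,
  sessionName: string,
  transcriptJson: string,
): Promise<string> {
  return client.complete(buildPrompt(sessionName, transcriptJson));
}
```

Keeping prompt construction separate from the network call means prompt tweaks can be inspected, or diffed across versions, without spending an API request.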

The vast majority of the code is plumbing, specifically:

- Retrieving the list of sessions from the IETF "datatracker"

- Retrieving the actual session transcripts from the Meetecho conferencing system used by IETF

- Formatting the site and publishing it

Retrieving the Session Transcripts

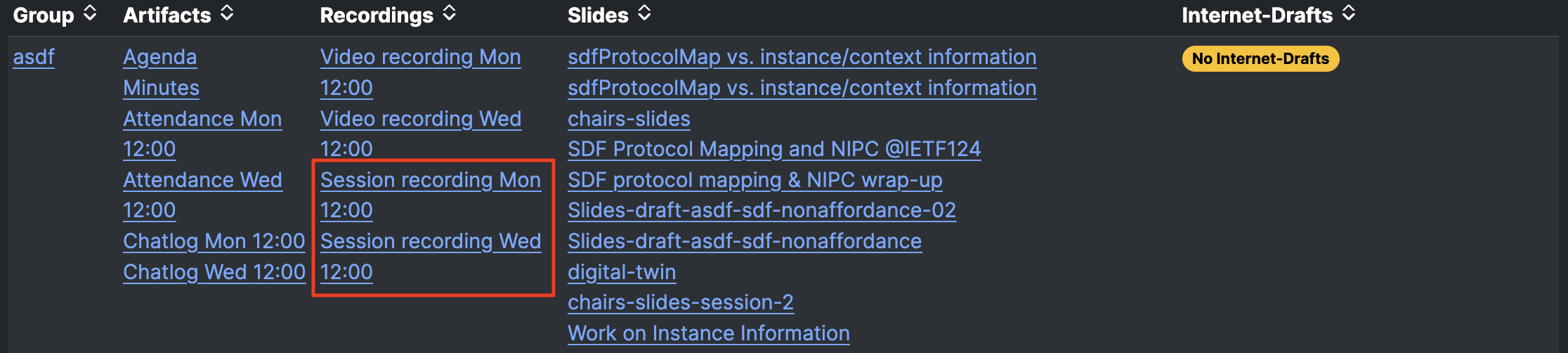

The first step is just to find the relevant sessions. Most IETF WG meetings happen at the thrice-yearly in-person IETF plenary meeting.[1] The IETF uses a homegrown tool called datatracker to manage IETF drafts, agendas, meeting materials, proceedings, and eventually the minutes. Datatracker is a somewhat aged (but actively maintained) Django app. Unfortunately, it's really designed to be used as a Web site and doesn't have a complete published API; it just exposes its object model with tastypie, so I had to do a bit of reverse engineering. At the end of the day I ended up using the proceedings page (e.g., https://datatracker.ietf.org/meeting/124/proceedings), where each session (WG or otherwise) has a link labeled "Session Recording".

The session recording link goes to a custom media player, which has the video (actually an embedded YouTube player) as well as an embedded transcript player. The player has a deterministic URL pattern, such as https://meetecho-player.ietf.org/playout/?session=IETF124-PLENARY-20251105-2230.

Each session is identified by a session ID, and the transcript itself is at a deterministic location based on that ID. This gives us a straightforward process:

- Parse the proceedings page to find all links labeled "Session Recording"

- Extract the session ID from the link to the player

- Construct the link to the transcript and download the transcript from the URL.
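Here is a minimal TypeScript sketch of those three steps, assuming the proceedings page has already been fetched as an HTML string and that a regular expression is adequate for this one link pattern (a production scraper would more likely use an HTML parser). The transcript URL template is a hypothetical parameter, since the post doesn't spell out the actual pattern, and the function names are illustrative rather than taken from the project.

```typescript
// Collects session IDs from <a ... href="...">Session Recording</a> links.
// Assumes double-quoted href attributes and exactly this link text.
function extractSessionIds(proceedingsHtml: string): string[] {
  const link = /<a\s[^>]*href="([^"]*)"[^>]*>\s*Session Recording\s*<\/a>/gi;
  const ids: string[] = [];
  const seen = new Set<string>();
  let m: RegExpExecArray | null;
  while ((m = link.exec(proceedingsHtml)) !== null) {
    const id = sessionIdFromPlayerUrl(m[1]);
    // A session can be linked more than once; keep the first occurrence only.
    if (id !== null && !seen.has(id)) {
      seen.add(id);
      ids.push(id);
    }
  }
  return ids;
}

// Reads the session= query parameter from a player URL such as
// https://meetecho-player.ietf.org/playout/?session=IETF124-PLENARY-20251105-2230
// The optional "amp;" tolerates an HTML-escaped "&amp;" separator.
function sessionIdFromPlayerUrl(href: string): string | null {
  const m = /[?&](?:amp;)?session=([^&#]+)/.exec(href);
  return m ? decodeURIComponent(m[1]) : null;
}

// HYPOTHETICAL: the transcript lives at a location derived from the session ID,
// but the pattern isn't given, so the template is a parameter containing "{session}".
function transcriptUrl(template: string, sessionId: string): string {
  return template.replace("{session}", encodeURIComponent(sessionId));
}
```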

The transcript itself is a JSON file consisting of timestamped fragments of transcribed speech:

```
[
  {
    "startTime": "00:00:00",
    "text": "yesterday I was over-achieving High is bad but I joke you see that Yeah, that's the point You know overnight was good Overnight was good for that please So this is the ASDF meeting meeting if you're here because you like trains, you'll have to go somewhere else Because the AASDF kid likes trains. I'll start in one minute, and we're with, we need a note taker yet Somebody take notes because I'm speaking Jan, are you taking a Yeah, thank you very much I'm doing the talking and Lorenzo is doing the projecting and don't ask him questions because he can't talk talk. He's allowed to. He just is unable to um and uh so yeah so oh, so we've actually changed the agenda already already. So note, well, you saw it yesterday you saw it everywhere else Please be nice to each other This is not the latest slide, but that's okay um and um yeah please be nice to each other um next item is we are going to continue with a version virtual interims. And And pardon me?"
  },
  {
    "startTime": "00:02:02",
    "text": "Yes. Thank you And so we have been doing them at, what time was it, your time, 9 a.m 9 a.m which worked well in in Europe and in in Korea and even in California and I was the odd man out at 3 a.m and i did not make it uh uh there um so the question is is that still a good time for everyone? who wants to be involved? Would you like to propose other times? If not, do we want to have one in December? beginning of December? Yes, no, yes Okay we're going to pick the first Wednesday in December whatever date that is and we're going to go with that at that same 9 a.m Eastern European time, yeah, which is I guess 7 a.m .T.C. Is that right? yeah okay we'll post that up and then I think we'll post a second one for maybe the second week of January. The first week is usually a toast Any great objections? to that and then we'll discuss what we're, where we're doing from there Anyone remotely want to comment on that? No Okay let's move on to the first real agenda item which is non-affordance and click either"
  },
  ...
]
```

Minutes Generation

The next step is to send the transcript to the LLM and ask it to make the minutes. As I mentioned above, this is conceptually simple but turns out to be somewhat slow (on the order of tens of seconds per session), which requires some careful handling. At the end of the day, I ended up doing two things:

- Caching the generated minutes so that you can run the rest of the code (generating the site, uploading it, etc.) without waiting for the LLM. This also makes things easier when running it in automation (see below).

- Switching from Claude Sonnet to Gemini Flash, which is a lot faster, as well as cheaper. It's still too slow to run in the inner loop when you're testing, but it made things much faster when I wanted to backfill all the minutes for the past 5 years or so.
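The caching idea can be sketched as a set difference between known sessions and cached files. This assumes a hypothetical cache layout of one Markdown file per session named after its session ID (the post doesn't describe the real layout), with the LLM call and file write passed in as callbacks.

```typescript
// HYPOTHETICAL cache layout: one Markdown file per session, named <sessionId>.md.
function cacheFileName(sessionId: string): string {
  return `${sessionId}.md`;
}

// Sessions whose minutes aren't cached yet, in their original order.
function sessionsToGenerate(allSessionIds: string[], cachedFiles: string[]): string[] {
  const cached = new Set(cachedFiles);
  return allSessionIds.filter((id) => !cached.has(cacheFileName(id)));
}

// Calls the (slow, paid) LLM only for uncached sessions. `generate` and `write`
// are placeholders for the LLM call and a file write. Requests run one at a
// time, which is slow but simple and gentle on API rate limits.
async function fillCache(
  allSessionIds: string[],
  cachedFiles: string[],
  generate: (sessionId: string) => Promise<string>,
  write: (fileName: string, markdown: string) => Promise<void>,
): Promise<string[]> {
  const todo = sessionsToGenerate(allSessionIds, cachedFiles);
  for (const id of todo) {
    const markdown = await generate(id);
    await write(cacheFileName(id), markdown);
  }
  return todo; // the newly generated sessions
}
```

Because everything downstream reads from the cache, the site can then be rebuilt as often as needed without any further LLM calls.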

This phase is where all the smarts are, but TBH both models do a pretty reasonable job (see below for more on this). I did run into one class of error that was bad enough to be worth doing something about: for some reason, the output would sometimes contain Markdown fences, such as `` ``` `` or `` ```markdown ``. This idiom tells the Markdown processor to render the enclosed text as literal code rather than process it, which isn't what we want here. I ended up just using a post-processor to remove anything like this.
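The post-processor itself isn't shown, so here is one minimal way it might work, assuming the stray fences wrap the entire response (the usual pattern when models do this). Stripping only a wrapping fence, rather than every fence line, has the side benefit of leaving any legitimate code block inside the minutes untouched.

```typescript
// An opening fence such as ``` or ```markdown (also ```md), trailing spaces allowed.
const FENCE_LINE = /^\s*```\s*(?:markdown|md)?\s*$/i;

// Removes a code fence that wraps the whole response, e.g. a response that
// starts with a ```markdown line and ends with a ``` line.
function stripWrappingFence(output: string): string {
  const lines = output.split("\n");
  let start = 0;
  let end = lines.length - 1;
  // Ignore blank lines at either end before looking for the fences.
  while (start <= end && lines[start].trim() === "") start++;
  while (end >= start && lines[end].trim() === "") end--;
  if (start < end && FENCE_LINE.test(lines[start]) && /^\s*```\s*$/.test(lines[end])) {
    return lines.slice(start + 1, end).join("\n");
  }
  return output; // no wrapping fence: leave the minutes as they are
}
```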

Generating the Site

I wanted to host this on GitHub Pages, which meant I needed a completely static site. My first cut at this was just putting the generated MD in the gh-pages branch and letting GitHub render the HTML. This works OK, but you end up stuck with GitHub's styling choices, and after fighting with Jekyll configuration for a while I decided it would be easier to generate the HTML locally. That way, I could use 11ty, which I was already familiar with, and I could test the HTML generation without having to push it to GitHub and wait for it to generate the pages.

I ended up with a three-step process:

- Generate the minutes in markdown using the LLM (see above).

- Starting with the generated minutes, generate the site markdown, as well as the site index.

- Using 11ty, generate the site HTML.

The nice thing about this structure was that it made it easy to work incrementally: once I had generated minutes for a few WG sessions I was able to generate a basic site and then iterate on the wrapping for each page (headers, footers, etc.), the styling, etc. without having to hit the LLM again, which makes things both faster and cheaper. It also meant that once I had things the way I wanted I could just generate the minutes for the rest of the WG sessions of interest and regenerate the whole site. It also made things easier when I went to automate everything later.
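The middle step (cached minutes in, site Markdown and index out) might look roughly like the sketch below. It assumes session IDs follow the IETF124-PLENARY-20251105-2230 pattern seen in the player URL, relies on 11ty's default directory-style permalinks, and uses hypothetical layout names (`minutes.njk`, `base.njk`); none of these details come from the project itself.

```typescript
interface CachedMinutes {
  sessionId: string; // e.g. IETF124-PLENARY-20251105-2230
  markdown: string;  // LLM output read from the cache
}

interface SitePage {
  path: string;    // path relative to the 11ty input directory
  content: string; // Markdown with YAML front matter
}

// ASSUMPTION: meeting session IDs look like IETF<n>-<GROUP>-<YYYYMMDD>-<HHMM>;
// anything else (e.g. interims) is filed under "other".
const SESSION_ID = /^IETF(\d+)-(.+)-(\d{8})-(\d{4})$/;

// Newest meeting first, with "other" at the end.
function meetingOrder(a: string, b: string): number {
  if (a === "other") return 1;
  if (b === "other") return -1;
  return Number(b) - Number(a);
}

function buildSite(entries: CachedMinutes[]): SitePage[] {
  const pages: SitePage[] = [];
  const byMeeting = new Map<string, string[]>();
  for (const e of entries) {
    const m = SESSION_ID.exec(e.sessionId);
    const meeting = m ? m[1] : "other";
    const title = m ? `${m[2]} (${m[3]} ${m[4]})` : e.sessionId;
    // HYPOTHETICAL layout name; JSON.stringify yields a valid YAML string.
    pages.push({
      path: `minutes/${e.sessionId}.md`,
      content: `---\nlayout: minutes.njk\ntitle: ${JSON.stringify(title)}\n---\n\n${e.markdown}\n`,
    });
    // 11ty's default permalink turns minutes/X.md into /minutes/X/.
    const links = byMeeting.get(meeting) ?? [];
    links.push(`- [${title}](/minutes/${e.sessionId}/)`);
    byMeeting.set(meeting, links);
  }
  const sections = Array.from(byMeeting.keys())
    .sort(meetingOrder)
    .map((k) => `## ${k === "other" ? "Other sessions" : `IETF ${k}`}\n\n${byMeeting.get(k)!.join("\n")}`);
  pages.push({
    path: "index.md",
    content: `---\nlayout: base.njk\ntitle: IETF minutes\n---\n\n${sections.join("\n\n")}\n`,
  });
  return pages;
}
```

Because this step reads only from the cache, it can be rerun freely while iterating on page wrappers and styling.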

Importantly, none of this code runs in the critical path because the site is entirely static. That's convenient for deployment because it means I can run on totally free infrastructure, but it's also good for security because there's basically nothing to compromise. This matters because, despite being a security professional, I don't really have that much experience building a secure dynamic Web site using modern tools like Django or RoR, so I prefer to design things in as fail-safe a fashion as I can.

Automation

In my first cut of the system, I just ran everything on my local machine, then committed the site to the GitHub pages branch and pushed it to GitHub. This is fine for generating minutes for meetings that happened months ago, but what you really want is to have the minutes generated in quasi real time, shortly after the sessions themselves happen (the transcripts take a while to generate, so it won't be in real time).

There are lots of options here, but given that I was already publishing with GitHub Pages, the easiest approach seemed to be to use GitHub Actions. There's no Web hook for when new transcripts are published, but Actions supports scheduled events, so I can just poll the site periodically. The tricky part here is maintaining the cache of generated minutes files, as we obviously don't want to have to regenerate all of them every time a new session transcript is published.

What I ended up doing was having two repos:

- ietf-minutes-data for the GitHub pages site and the cache of generated minutes, which is stored as a branch.

- auto-minutes for the code to generate the minutes.

The reason for the two repos is that I need the action to have write access to the repo so it can re-commit the cache, but I didn't want to give it write access to the code itself in case I screwed something up or there was a security issue. This way, even if there is a total compromise of the system, the worst thing that can happen is damage to the data. I'm not a GitHub actions wizard, so there might be some other way to do this, but I like to keep things simple.

The action itself is attached to the ietf-minutes-data repo. GitHub doesn't do a very good job of running the job at the scheduled time, but it does seem to run eventually, and, as I mentioned above, the transcripts take a while to show up, so it's not that big a deal. Once it does fire, here's what happens:

- Check out the `auto-minutes` repo, which has the actual code, and `npm install` all the dependencies.

- Check out the `cache` branch of `ietf-minutes-data`, containing all previously AI-generated minutes.

- Run the minutes generator and generate minutes for all sessions that haven't been generated yet. This is the only stage that uses AI.

- If there are new minutes in the cache directory (because there were new sessions), commit them to the cache branch and push it back to GitHub. If no new minutes were generated, the script aborts at this point.

- If there are new minutes, regenerate the site itself and deploy it to GitHub Pages.[2] This is fast because it just means regenerating the metadata pages (indexes and the like) and running 11ty to generate the HTML.
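The control flow of one scheduled run can be sketched independently of the workflow file. The `PipelineSteps` hooks are hypothetical stand-ins for the real Actions steps, and the `rebuildEvenIfUnchanged` flag is an illustrative addition addressing the gap described in footnote [2], not something the post says exists.

```typescript
// HYPOTHETICAL hooks: in the actual workflow these are separate Actions steps
// (checkouts, npm install, the generator, git push, 11ty, Pages deploy).
interface PipelineSteps {
  generateMissingMinutes(): Promise<string[]>; // IDs of newly generated sessions
  commitCache(newIds: string[]): Promise<void>;
  rebuildSite(): Promise<void>;
  deploySite(): Promise<void>;
}

type RunResult = "no-change" | "deployed";

// One scheduled run. Without the flag, a template change with no new sessions
// would never be published.
async function runScheduledUpdate(steps: PipelineSteps, rebuildEvenIfUnchanged: boolean): Promise<RunResult> {
  const newIds = await steps.generateMissingMinutes(); // the only AI stage
  if (newIds.length > 0) {
    await steps.commitCache(newIds); // persist the expensive output first
  } else if (!rebuildEvenIfUnchanged) {
    return "no-change"; // nothing new: stop here
  }
  await steps.rebuildSite();
  await steps.deploySite();
  return "deployed";
}
```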

It took some messing around to get this running because I was doing a lot of this on a plane (see also "not a GitHub actions wizard", supra), but once I had it up and running it all worked smoothly for the rest of the meeting.

Tuning Output Quality

As I said above, the output quality is pretty good, but it's far from perfect. With a lot of examples to work from, you can see some consistent patterns:

- Mis-rendering people's names (e.g., "Martin Thompson" rather than "Martin Thomson", "Eric Griswold" for "Eric Rescorla").

- Mis-identifying speakers entirely (e.g., Rich Salz as me).

- Mis-rendering technical terms (e.g., "Quick" for "QUIC"; this is actually an interesting one because it gets it right the first time).

- Misstating the result of a discussion, e.g., saying there was consensus if there wasn't.

For instance, here is a session I was in, which has a number of examples of the above.

The high-level challenge here is that the model itself is kind of a black box; we can of course tweak the prompt, but it's difficult to predict if that's going to have the right effect. You can obviously try it with a single problematic session (I even added a mode specifically for that), but even if you get the right result on that specific session, you don't know if it will make things worse on some other session, so it's hard to know how aggressive to be. On the one hand, the prompt is already kind of ad hoc, but you also don't want to be doing a random walk through the prompt space. I suspect the pro thing to do is to actually fine-tune a model, but I'm not sure I have enough energy for that for what is, at the end of the day, kind of a hack.

With that said, there are a few obvious things to do that seem likely to improve quality.

Generate the Transcript Ourselves

As I said above, I'm just using Meetecho's transcript generation function. Some brief inspection of the transcripts it is emitting suggests that there's a fair amount of room for improvement, and if there are errors in the transcript, this has the potential to affect the generated minutes (although in some cases I've actually seen the minutes generation fix errors in the transcript!).

The obvious thing to do is to instead do the STT ourselves from the audio recording using a more advanced STT model (e.g., Gemini Audio Understanding). Unfortunately, for reasons I don't quite understand, the IETF doesn't presently make the audio recordings available. It's probably possible to use youtube-dl or the like to get the video and then pull out the audio, but that's not really the way I would prefer to do things. This is a TODO for the future when the IETF cracks the code on how to host audio files.

Identify the Speaker Explicitly

As noted above, a persistent problem is misidentifying speakers, either by mis-rendering their name or getting the wrong person altogether. This is kind of irritating because the information is actually in the system somewhere. The IETF actually has a fairly fancy audio setup, with at least four different audio inputs (sometimes with multiple microphones in each).

- Chair

- Presenter

- Room (for comments and questions)

- Remote

All of this stuff feeds into Meetecho as well as into the room speakers, but Meetecho's transcript generation removes which channel the audio came in on. If the transcript were just annotated with the input channel, it would be a lot easier to figure out who was speaking. It's actually possible to do better than that, though each case needs special handling.

Chair

Your typical IETF WG has one or two chairs and the IETF datatracker already records the chairs, so it's straightforward to reduce the chair mic down to one or two people. In my experience, the chairs typically don't identify themselves, so you'd probably need some speaker recognition (perhaps augmented by the video stream) to determine which chair was talking. Still, just knowing that it was a chair would be helpful.

Presenter

Each WG session is likely to have a number of presenters, but there's a fair amount of metadata available to determine which one is which, including:

- The agenda listing who is presenting

- The chairs announcing the next slot

You'd need to play around with things a bit to determine whether it was best to try to do something smart or just feed all of the information into the model and let it figure things out, but again, just knowing that some audio came from the presenter mic would help.

Room

It used to be very difficult to know who was speaking at the room microphones, but as part of the effort to make participation remote-friendly, the IETF now has a unified queue management system covering both remote and in-room mics, so if you want to speak at the room mic, you need to get in the queue first. This means that even though Meetecho doesn't necessarily know who is actually speaking, it knows who is at the head of the queue and whether they are local or remote, so you could probably just assume that audio from the in-room mic comes from the person at the head of the mic line. This won't be perfect, because sometimes people butt in or reorder themselves, but it would be a lot better.

Remote

This is actually the easiest: you can't remotely participate in an IETF meeting without using the remote Meetecho tool, and that requires registering, so Meetecho knows exactly which individual is speaking over a given remote channel.
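None of this is implemented, but the per-channel rules above are simple enough to sketch. The sketch assumes a hypothetical transcript format in which each fragment carries its input channel (Meetecho's current transcripts don't), and that the chair list, current presenter, and head of the mic queue have already been looked up; all type and function names are illustrative.

```typescript
// HYPOTHETICAL transcript format: today's transcripts don't record which input
// a fragment came from; this assumes a version that does.
type Channel = "chair" | "presenter" | "room" | "remote";

interface ChannelFragment {
  startTime: string;
  text: string;
  channel: Channel;
  remoteParticipant?: string; // registered Meetecho user, for remote audio
}

// Side information the post describes as available elsewhere.
interface SpeakerContext {
  chairs: string[];                // from datatracker
  currentPresenter: string | null; // from the agenda and the chairs' announcements
  queueHead: string | null;        // head of the unified mic queue
}

// Candidate speakers for one fragment: one name is a near-certain attribution,
// several names (the chairs) still need disambiguation, none means unknown.
function candidateSpeakers(f: ChannelFragment, ctx: SpeakerContext): string[] {
  if (f.channel === "remote") {
    return f.remoteParticipant ? [f.remoteParticipant] : [];
  }
  if (f.channel === "chair") {
    return ctx.chairs;
  }
  if (f.channel === "presenter") {
    return ctx.currentPresenter ? [ctx.currentPresenter] : [];
  }
  // Room mic: best guess is the head of the queue (people sometimes butt in).
  return ctx.queueHead ? [ctx.queueHead] : [];
}

// Renders a fragment with a speaker hint that could be passed to the LLM.
function annotate(f: ChannelFragment, ctx: SpeakerContext): string {
  const who = candidateSpeakers(f, ctx);
  const label =
    who.length === 1 ? who[0]
    : who.length > 1 ? `one of ${who.join(", ")}`
    : `unknown, ${f.channel} mic`;
  return `[${f.startTime}] [${label}] ${f.text}`;
}
```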

Ground the Output in Existing IETF Information

Finally, there's a broader opportunity to ground the output in existing IETF information, and in particular to give the LLM a hint about some commonly used words and phrases. For example, we could provide:

- The list of people actually in a session, so that it knows what the most likely names are.

- The WG agenda, so it knows the presentation order (see above).

- The presentations and documents associated with a given WG session.

- A list of common terms and acronyms, so that it knows that if you talk about "QUIC" it's probably not "Quick".

I've been looking at this a bit; maybe for next time.
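As a rough sketch of what that grounding might look like, the lists above could be rendered into an extra prompt section placed ahead of the transcript. The `Grounding` shape, including a glossary that maps each correct term to a likely mis-transcription, is an assumption for illustration; the post doesn't say how this information would be fetched or formatted.

```typescript
// HYPOTHETICAL inputs: possible sources (mostly datatracker), in an assumed shape.
interface Grounding {
  attendees: string[];              // people in the session
  agenda: string[];                 // presentation slots, in order
  documents: string[];              // drafts and slides for the session
  glossary: Record<string, string>; // correct term -> a likely mis-transcription
}

// Builds an extra prompt section to place ahead of the transcript.
// Empty inputs are skipped so the prompt doesn't carry blank headings.
function buildGroundingSection(g: Grounding): string {
  const parts: string[] = [];
  if (g.attendees.length > 0) {
    parts.push("Participants (use these spellings for names):\n" + g.attendees.map((n) => `- ${n}`).join("\n"));
  }
  if (g.agenda.length > 0) {
    parts.push("Agenda, in presentation order:\n" + g.agenda.map((item, i) => `${i + 1}. ${item}`).join("\n"));
  }
  if (g.documents.length > 0) {
    parts.push("Documents discussed:\n" + g.documents.map((d) => `- ${d}`).join("\n"));
  }
  const terms = Object.keys(g.glossary);
  if (terms.length > 0) {
    parts.push(
      "Technical terms (the transcript may misspell them):\n" +
        terms.map((t) => `- ${t} (may appear as "${g.glossary[t]}")`).join("\n"),
    );
  }
  return parts.join("\n\n");
}
```

Whether hints like these actually fix errors such as "Martin Thompson" would itself need testing across many sessions, for the reasons discussed under output quality.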

Take Homes

This is a pretty simple project, but it gave me the opportunity to play with a bunch of AI tools, and while I'm not any kind of expert, I do think there are some useful lessons.

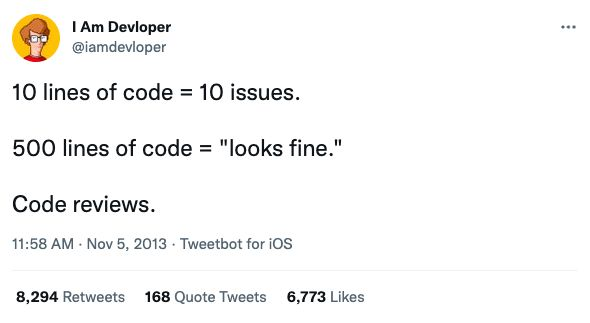

AI Code Generation

The vast majority of this code was written with AI coding tools, mostly Claude Code. Mostly, I just pointed Claude Code at the APIs and told it what I wanted and let it rip. I did some light review of the code to see if it seemed to be doing what I wanted, but if I'm being honest, it was mostly this:

I fear this is the future of AI coding assistance, as it's very difficult to maintain attention when reviewing big PRs.

This isn't ideal, but this particular site is pretty low stakes and one of the advantages of the split architecture I'm using is that even if you make some pretty egregious mistakes in the code, there's (hopefully!) not too much that can go wrong beyond having the site itself end up wrong.

I know lots of other people have used AI coding tools, but I wanted to form my own opinion, so FWIW, here are some thoughts.

First, my impression is that Claude is really good at the routine stuff like knowing how to scrape a site, parsing the HTML, figuring out which parts of the DOM to examine, etc. It wasn't as good at figuring out the overall architecture, but if you ask it to do the job in pieces and correct it when you're unhappy, it works better. This matches my experience using Gemini, and herding the AI seems like a skill that we're all going to have to learn.

It's especially helpful to have a tool like this for doing routine stuff you're bad at or don't want to learn. For example, I suck at CSS, but I also don't want to do anything complicated, so generally if you just tell Claude what you want and don't care too much about the exact details of how things look, it does an OK job. There's still a bunch of stuff where you have to be like "no, I really want that menu item 20% lower", and in some cases you eventually have to get in there and fix things yourself, but again, having a computer do the busywork is great.

The time scale is oddly inhuman: Claude is incredibly fast at generating a big pile of code, but if you want some trivial change you still somehow end up with a lot of think time latency, where you're just sitting and staring at the screen waiting for Claude Code to get back to you. I did a lot of this work sitting in meetings, so I could just tune out and wait for the model, but if this is all I was doing, then I'd want to develop some different work rhythms. I've heard of people having a lot of different outstanding tasks and waiting for them to complete and reviewing them, but this isn't something I've tried much yet.

Summarization

This is the first project I've worked on where I didn't just use AI to generate the code but where AI was actually a core piece of the operations. It's a really different experience from normal engineering because the AI is basically a nondeterministic black box that you have to kind of talk into doing what you want. This has a few implications that take some getting used to.

First, it's really hard to know what the impact of any particular change to the prompt will be. For example, we've been having a sort of persistent problem where the model would report that there was consensus on a certain point, even though the chairs hadn't declared consensus. Mark Nottingham and I spent some time tweaking the prompt trying to get it not to declare consensus incorrectly, but the results really weren't quite what we were hoping.

Second, because the system is nondeterministic, you don't get exactly the same results with the same inputs, which makes things hard to test. It's of course trivial to regenerate any individual session as a test, but the problem is that just because it works once doesn't mean it will work reliably. I'm very curious what other people do in this kind of situation, but just on first impression this feels a bit like a statistical process control problem, where we'd have to do something like A/B tests with a given prompt (or, again, try to fine-tune the model).
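To make the statistical framing a bit more concrete, here is a minimal sketch under stated assumptions: regenerate a set of sessions many times under prompt A and prompt B, score each output with an automated check, and compare failure rates with a standard two-proportion z-test. The keyword check is a deliberately crude, hypothetical stand-in for "the model reported consensus the chairs didn't declare".

```typescript
// Crude, HYPOTHETICAL check for the "phantom consensus" problem: the minutes
// mention consensus but the transcript never does. It misses paraphrases and
// can't tell "no consensus" from "consensus".
function claimsUnsupportedConsensus(minutes: string, transcriptText: string): boolean {
  const mentions = (s: string): boolean => /\bconsensus\b/i.test(s);
  return mentions(minutes) && !mentions(transcriptText);
}

interface Tally {
  failures: number;
  trials: number;
}

function tally(checks: boolean[]): Tally {
  return { failures: checks.filter((failed) => failed).length, trials: checks.length };
}

// Two-proportion z statistic comparing the failure rates of prompts A and B.
// Positive z means A fails more often; |z| > 1.96 is roughly significant at the
// 5% level (two-sided), assuming independent runs and enough trials for the
// normal approximation to hold.
function twoProportionZ(a: Tally, b: Tally): number {
  const pA = a.failures / a.trials;
  const pB = b.failures / b.trials;
  const pooled = (a.failures + b.failures) / (a.trials + b.trials);
  const se = Math.sqrt(pooled * (1 - pooled) * (1 / a.trials + 1 / b.trials));
  return se === 0 ? 0 : (pA - pB) / se;
}
```

For example, 30 failures in 100 runs under prompt A versus 15 in 100 under prompt B gives z of about 2.54. Repeated runs on the same few sessions aren't fully independent, though, so spreading trials across many sessions makes the test's assumptions more plausible.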

From a product delivery perspective, I also noticed that this is a bit confusing for other people, who are used to software behaving predictably. I've gotten a number of bug reports that are basically of the form "the generated minutes don't seem quite right", and my response is kind of the same: ¯\_(ツ)_/¯. As noted above, I have a few ideas for how to improve things, but fundamentally, I don't know how to fix specific defects.

Integration into the process

Even as-is, the minutes are of reasonable quality (and the bar here is pretty low), but they can definitely contain errors (as they say, "AI can make mistakes"). The idea here isn't to replace the minutes in the IETF proceedings but rather to make the process of generating them easier. I'm not going to judge you if you just take what I've generated and submit it as the minutes, but a better practice is to at least give it a once-over to fix any glitches before submitting them.

The bigger picture

This project wouldn't have happened without modern AI tooling. Even ignoring that it depends on AI to do the summarization, I'm not sure I would have gotten around to doing it without being able to use AI to write most of the code for me.

There's nothing particularly complicated here, but there's a lot of routine but fiddly work (scraping the site, finding the exact parts of the DOM to extract, talking to the AI API, tweaking the site look and feel, etc.) that takes time. In many cases, these tasks require you to learn something that you don't already know, that isn't very conceptually interesting (what are the arguments to this API call?), and that you will quickly forget. This is all friction in the coding process that the AI lets you just skip over, because the assistant can usually figure it out. I know there are a bunch of debates about how much the AI is really figuring things out versus just pattern matching from a lot of other people's examples, but as a practical matter, it kind of doesn't matter.

On the other hand, while it's amazing to get so much done with so little personal effort, there's also something a bit unsatisfying about it, seeing as you didn't do much of the work yourself, but instead supervised some AI doing it. This experience may be familiar to more senior technical people who have moved from doing a lot of actual software engineering to leading projects, as a tech lead, architect, or CTO; you do a lot more architecture and steering and a lot less actual hands-on work. The vague unease that you don't really understand what's going on isn't new either; of course what's different here is that—at least in theory—your co-workers understood it and you trusted them, whereas here it's just you and the machine, at best a p-zombie and at worst a fancy random number generator, but nevertheless I know a lot of more senior engineering people miss the feeling that they did it themselves as opposed to leading other people who did the actual work.[3]

A huge amount has been written about how to use these tools safely and efficiently; this is understandable given the general efficiency-maxxing ethos of technology. Less explored, however, is the question of how to use them enjoyably. One of the great things about being a professional software engineer is that programming is fun, not just the feeling of building something cool, but the experience of flow, when the code just seems to come effortlessly from your brain to the keyboard. At least for me, the experience of using AI tools like Claude Code is totally different: you ask the machine to do something, then sit and wait for it to spit back a response; this is the opposite of flow. I can't help but wonder whether some of the resistance to AI in the software engineering community is about AI taking the fun out of the experience of programming. I certainly feel some of this, at the same time as I try to remind myself that what's really important is accomplishing stuff, not whether you did it personally or had fun, and that means using the best tools you can and having the best people do the work, even if those people are robots,[4] but that doesn't mean I don't want to have fun at the same time.

[1] There is actually a plenary meeting at the IETF plenary. I'm using the term "plenary" here to distinguish from interim meetings.

[2] Now that I'm writing this, I see that it's actually a bug because it means that if I change the templates but there are no new sessions, things don't get regenerated. This is a side effect of the fact that I was originally doing things by hand and then wrote the automation during the IETF meeting, but after I had the templates written, so there were always new sessions.

[3] It's not uncommon to see very senior people whose job is really to set the direction for the organization instead jumping in and trying to write code. Sometimes this is what's needed ("all hands on deck"), but in my experience it's far more often about prioritizing your feeling that you're doing "real work" over the thing you should be doing.

[4] See also Thomas Ptacek's "My AI Skeptic Friends Are All Nuts".