DNS Security, Part II: DNSSEC

Posted by ekr on 24 Dec 2021

This is Part II of my series on DNS Security. (see part I for an overview of DNS and its security issues). In this part, we cover Domain Name System Security Extensions, popularly known as DNSSEC.

As documented in part I, baseline DNS is tragically insecure and the DNS community has been working on fixing it for pretty much as long as I've been working in Internet security (the original RFC for DNSSEC was published in 1997, but of course people had been working on it for quite some time before that). The purpose of DNSSEC is to provide authenticity and integrity for DNS records, so that when you get a result you know it is correct. It does not do anything to preserve confidentiality of the query or the results.

Overview #

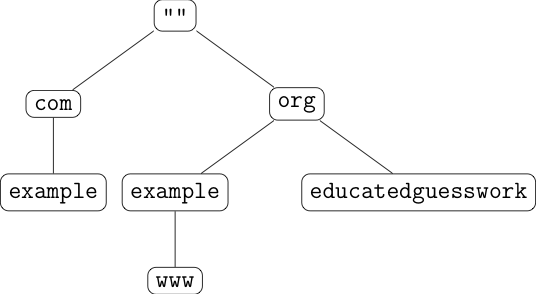

The basic idea behind DNSSEC is straightforward: digitally sign the entries in the database ("resource records"). I mentioned before that names exist in a hierarchy: In DNSSEC, each node in the hierarchy has a key which is used to digitally sign all the records at that node.

For instance, in the picture above, there would be a single root key which then signs keys for com and org. Similarly, com (the parent) signs the key for example.com (the child), which is then used to sign the records for example.com. The logic here is:

- You know the root key (because it's been preconfigured in some way).
- You know the key for com because the root attests to it by signing it.
- You know the key for example.com because com attests to it by signing it.
- You can trust the IP address for example.com because example.com signed it with its key.

When a client receives records for a domain, it verifies them by checking all the signatures from the root down to the domain and then checking the signature over the records themselves.
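The verification walk can be sketched in a few lines of Python. This is only an illustration under loud assumptions: the "signatures" are toy textbook RSA built from small Mersenne primes (no padding, not secure), the key encoding and the helper names (`make_key`, `validate_chain`, and so on) are invented for this example, and real DNSSEC uses DNSKEY, DS, and RRSIG records rather than this simplified structure.

```python
import hashlib

# Toy textbook RSA, for illustration only: tiny keys, no padding, shared primes.
# Never use anything like this for real signatures.
def make_key(p, q, e=65537):
    n = p * q
    d = pow(e, -1, (p - 1) * (q - 1))  # private exponent (needs Python 3.8+)
    return {"public": (n, e), "private": (n, d)}

def _digest(data, n):
    # Hash the data and reduce it into the range of the modulus.
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % n

def sign(private, data):
    n, d = private
    return pow(_digest(data, n), d, n)

def verify(public, data, signature):
    n, e = public
    return pow(signature, e, n) == _digest(data, n)

def encode_key(public):
    # Hypothetical serialization of a public key so that a parent can sign it.
    n, e = public
    return f"{n}:{e}".encode()

def validate_chain(trust_anchor, links, record, record_sig):
    """Walk from the preconfigured root key down to the record.

    links is a list of (child_public_key, signature_by_parent), root first.
    """
    current = trust_anchor
    for child_public, sig_by_parent in links:
        if not verify(current, encode_key(child_public), sig_by_parent):
            return False  # the parent did not attest to this child key
        current = child_public
    # Finally, check the signature over the record itself.
    return verify(current, record, record_sig)

M61, M89, M107, M127 = 2**61 - 1, 2**89 - 1, 2**107 - 1, 2**127 - 1
root = make_key(M61, M89)
com = make_key(M89, M107)
example = make_key(M107, M127)

record = b"example.com. A 192.0.2.1"
links = [
    (com["public"], sign(root["private"], encode_key(com["public"]))),
    (example["public"], sign(com["private"], encode_key(example["public"]))),
]
record_sig = sign(example["private"], record)
```

The only thing the client needs in advance is `root["public"]`; everything else arrives with the response and is checked link by link.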

Note: the technical terminology here is "zone", which refers to a portion of a tree controlled by a single entity. For instance, com is one zone and example.com another, but www.example.com might be part of the example.com zone if it's managed by the same people. This is a very important distinction if you're working with the DNS, but here I'll mostly be using "domain" and "zone" interchangeably.

There are a number of important properties of this design, as detailed below.

DNSSEC authenticates data not transactions #

Because DNSSEC signs objects, those signed objects are self-contained and it doesn't matter how you receive them. This means that as long as you verify the signatures you don't need to trust the DNS server you got them from, at least as far as the correctness of the records goes. It's even possible to embed DNSSEC-signed chains in other protocols, such as TLS. Indeed, one way to think of DNSSEC is that it's just end-to-end authenticated data carried over an insecure transport.

This also means that it's possible to have DNSSEC operate entirely offline, where the records are signed with some key that is never on a machine connected to the Internet. This is by contrast to TLS, which requires the key to be available to the server all the time. This was considered a very important design criterion at the time, but in practice I'm not sure that this has turned out to be that great a decision. In particular, some of the design choices downstream of that requirement have turned out to be questionable.

One of these decisions is that it's hard to keep the contents of a given zone private, which a lot of enterprises don't like.[1] The reason for this is that there needs to be a way to say that an arbitrary name doesn't exist, but you can't predict all the names in advance. In an online system like TLS, you would just say "no" to whatever the question was, but that doesn't work in an offline system. DNSSEC handles this by having a record called NSEC which says "the next name in alphabetical sequence after name X is name Y". The problem is that this can then be used to enumerate all the names in a domain, just by repeatedly asking "what's next?" like a five year old. The DNS community has spent a lot of effort in trying to design a system that wouldn't have this problem, but the strongest current mechanism (NSEC3) turns out to be not that effective, for technical reasons outside the scope of this post.
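To make the "what's next?" attack concrete, here is a small simulation. It is a sketch under simplifying assumptions: the zone contents are made up, names are represented by their leftmost label (with the empty string standing in for the zone apex), and ordering is plain string ordering rather than the canonical DNS name ordering. The function names are hypothetical.

```python
import bisect

# Hypothetical zone contents; "" stands in for the zone apex.
ZONE = sorted(["", "admin", "mail", "secret-project", "www"])

def nsec_for(zone, query):
    """Server side: return the NSEC pair (owner, next_name) covering `query`.

    The pair says "nothing exists between owner and next_name", and it can
    be signed offline because it does not depend on the query itself.
    """
    i = bisect.bisect_right(zone, query) - 1  # last existing name <= query
    owner = zone[i]
    next_name = zone[(i + 1) % len(zone)]     # the chain wraps back to the apex
    return owner, next_name

def walk_zone(zone):
    """Attacker side: enumerate the zone using only denial-of-existence answers."""
    found = []
    current = ""  # start at the apex
    while True:
        # Ask about a name that sorts immediately after the last one we learned.
        _owner, next_name = nsec_for(zone, current + "\x00")
        if next_name == "":  # wrapped around: we have seen everything
            return found
        found.append(next_name)
        current = next_name

print(walk_zone(ZONE))  # ['admin', 'mail', 'secret-project', 'www']
```

The attacker never guesses a real name; each negative answer hands over the next one.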

Limited Protection Against Censorship #

While DNSSEC provides protection against someone inserting false data into your DNS resolution, it does not protect against someone who just wants to stop you from accessing a given site because that attacker can just suppress the DNS response or alternately inject a bogus response of their own. The signature won't verify of course, but it doesn't matter because you still don't have the answer. As a practical matter, what happens is that the resolver reports an error, and you know things have failed, but it doesn't really matter because you still can't get where you are trying to go.

Cryptographic Algorithms #

One property of DNSSEC that has gotten a lot of negative attention--for instance by Ptacek and Langley a few years ago--is its use of weak cryptography. When DNSSEC was first designed, it used RSA with 1024-bit keys for signatures. This is no longer considered secure--the minimum key length for the WebPKI has been 2048 bits since 2012--and the system has very gradually been moving towards using longer RSA key lengths (2048- or at least 1024-bit) or more modern elliptic curve signatures. It appears that most domains now have 2048-bit keys as well as 1024-bit keys, which I suspect is a backward compatibility mechanism, as well as some elliptic curve keys.

In general, backward compatibility is a big challenge for an object security protocol like DNSSEC. The issue is that you need whatever data you produce to be readable by everyone--in contrast to an interactive protocol like TLS, where you can negotiate what to do and detect when things break--so for instance, if you introduce a new algorithm it has to be done in such a way that it doesn't break old verifiers. This means that you have to (1) have the presence of that algorithm/signature not be a problem and (2) provide signatures with the old algorithm until those old verifiers have upgraded to support the new algorithm or until you no longer care about them--which may be more or less indefinitely.[2]

This turns out to be especially difficult for the root keys, which are preconfigured into every resolver and which required a quite ornate procedure to roll over back in 2018, including the following dire warnings:

Once the new keys have been generated, network operators performing DNSSEC validation will need to update their systems with the new key so that when a user attempts to visit a website, it can validate it against the new KSK.

Maintaining an up-to-date KSK is essential to ensuring DNSSEC-validating DNS resolvers continue to function following the rollover.

Failure to have the current root zone KSK will mean that DNSSEC-validating DNS resolvers will be unable to resolve any DNS queries.

In the event, this seems to have gone relatively smoothly, albeit after quite a bit of effort and a delay of a year.[3]

Limited Trust #

DNSSEC has a much stricter trust hierarchy than the WebPKI certificate system: in the WebPKI, pretty much any CA[4] can sign any domain name. This means that even if you are example.com and have all of your certificates from Let's Encrypt, an attacker can compromise another certificate authority and get it to issue a certificate for example.com. The WebPKI has had several mechanisms bolted on after the fact to control this kind of attack[5] but this is generally recognized as kind of a misfeature.

By contrast, in DNSSEC, only the entity responsible for .com can sign the keys for example.com, which means that the attacker needs to compromise either that entity or one of the places it gets its data from (e.g., a domain name registrar), which is a much smaller set than the 100 or so WebPKI certificate authorities accepted by a major browser. [Updated: 2021-12-24. This originally said 1000. Thanks to Phillip Hallam-Baker and Ryan Hurst for the correction.] This also has some issues in terms of deployment, as we'll see later, but from a security perspective it's a win.

At this point it's natural to think that you might replace the WebPKI with DNSSEC signed keys, and in fact DNS Authentication of Named Entities (DANE) attempts to do precisely this. I plan to cover this in a future post.

DNSSEC Does not provide privacy #

DNSSEC does not do anything to provide privacy. It's the same old DNS as before, just with signed records, so the privacy properties are just as bad as before.

Incremental Deployment #

Because DNSSEC is a retrofit, it had to be added in a backward compatible way. As a practical matter, that meant an incremental rollout in which some data was signed and some was not. The problem here is setting the client's expectations correctly: suppose that you try to resolve example.com and you get an unsigned result. Does this mean that example.com is really unsigned or that it actually is signed but you're under attack by someone who wants you to think it's unsigned (obviously, they can't send you a valid signed record)? On the Web this is handled by having two different URL schemes, http: and https:, with https: telling the client to expect encryption and to fail if it doesn't get it, but that doesn't work with DNS where the names are the same.

The solution is to have an indication in the parent zone about the status of the child. Specifically, when you ask a parent which is using DNSSEC for information about the child, then one of two things happens:

- If the child is using DNSSEC, the parent sends a delegation signer (DS) record to provide a hash of the child's key.[6]

- If the child is not using DNSSEC, the parent responds with a next secure (NSEC) record which indicates that the child is not using DNSSEC.[7]

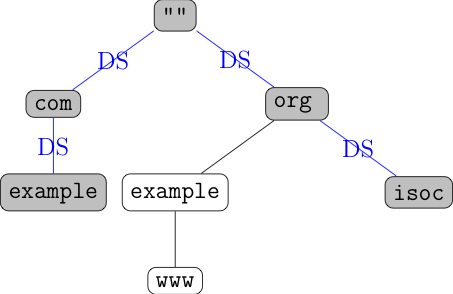

The figure below shows this what this looks like:

In this figure, the shaded domains are DNSSEC signed, and the empty ones are

unshaded ones are unsigned. Every time a child node is signed, the

parent has a DS record attached indicating the key.

[Update 2021-12-24: Updated the figure to show isoc.org, which really does

have a DS record. Thanks to Thomas Ptacek for pointing this out.]

In order for this to work properly, both the NSEC and DS records have to be signed. The DS record has to be signed because otherwise you can't trust the key; the NSEC record has to be signed because otherwise you can't trust the claim that the child is unsigned (this is called "authenticated denial of existence"). This also means that in order for a child to be secured with DNSSEC, its parent needs to be secured, which means its parent needs to be secured, and so on all the way to the root. The result is that DNSSEC mostly has to be deployed top down, with the root being signed first and then the top level domains, etc.[8]
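As a rough illustration of what "a hash of the child's key" means, the sketch below computes a SHA-256 DS-style digest over the child's owner name (in wire format) followed by the DNSKEY record data, which is my understanding of how RFC 4034 defines it. The key bytes are a placeholder rather than a real key, and the function names are made up for this example.

```python
import hashlib
import struct

def name_to_wire(name):
    """Encode a domain name as lowercase length-prefixed labels ending in a zero byte."""
    labels = [label for label in name.lower().rstrip(".").split(".") if label]
    return b"".join(bytes([len(label)]) + label.encode("ascii") for label in labels) + b"\x00"

def dnskey_rdata(flags, protocol, algorithm, public_key):
    """DNSKEY RDATA: 16-bit flags, 8-bit protocol, 8-bit algorithm, then the key bytes."""
    return struct.pack("!HBB", flags, protocol, algorithm) + public_key

def ds_digest_sha256(owner, rdata):
    """Digest published by the parent: SHA-256(owner name | DNSKEY RDATA)."""
    return hashlib.sha256(name_to_wire(owner) + rdata).hexdigest()

def ds_matches(owner, rdata, ds_digest_hex):
    """Client side: does the key the child presented match the parent's DS record?"""
    return ds_digest_sha256(owner, rdata) == ds_digest_hex.lower()

# Placeholder key material (NOT a real key): flags 257, protocol 3,
# algorithm 13 (ECDSA P-256 with SHA-256), 64 bytes of public key.
child_key = dnskey_rdata(257, 3, 13, bytes(range(64)))
parent_ds = ds_digest_sha256("example.com", child_key)
```

Because the parent signs the DS record, checking `ds_matches("example.com", child_key, parent_ds)` is what ties the child's key back to the parent's signature.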

Putting this all together, when a client goes to resolve a name, it gets one of three results:

- The result is validly signed all the way back to the root and therefore is trustworthy (RFC 4033 calls this "Secure").

- The result is supposed to be signed but actually can't be verified for some reason such as an invalid signature, broken keys, missing signatures, etc. ("Bogus")

- The result is not supposed to be signed (and presumably isn't) ("Insecure")[9].

As with any cryptographic system, it's not really possible to distinguish between error (misconfiguration, network damage, etc.) and attack, but as a practical matter any Bogus result has to be treated as if it were an attack and the client has to generate some kind of error, whether it's just failing with an error ("hard fail") or warning the user with an option to override ("soft fail"). In either case it's not OK to just silently accept the result and move on because then an attacker can just substitute their own data with an invalid signature and trick you into accepting it.
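The three outcomes and the client's reaction can be written down as a small decision function. This is a deliberately simplified sketch: the two boolean inputs and the names `classify` and `client_action` are assumptions for illustration, and a real validator has to establish the "no DS record" case from a signed NSEC response rather than take it on faith.

```python
from enum import Enum

class Status(Enum):
    SECURE = "secure"
    BOGUS = "bogus"
    INSECURE = "insecure"

def classify(ds_present, chain_verifies):
    """Map what the client learned onto the three states.

    ds_present: the parent (via signed records) says the child has a key.
    chain_verifies: every signature from the root down to the record checked out.
    """
    if not ds_present:
        # Assumes the absence of a DS record was itself proven by a signed NSEC.
        return Status.INSECURE
    return Status.SECURE if chain_verifies else Status.BOGUS

def client_action(status, hard_fail=True):
    """Bogus results are never silently accepted."""
    if status is Status.BOGUS:
        return "error" if hard_fail else "warn"
    return "accept"
```

For example, `client_action(classify(True, False))` yields `"error"`, while an unsigned zone (`classify(False, False)`) is accepted as usual.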

DNSSEC Deployment #

So how much DNSSEC deployment is there? There are a number of ways of looking at this question.

- How many top-level domains (.org, .com, etc.) are signed?
- How many second-level domains (example.org, etc.) are signed?
- What fraction of resolutions verify DNSSEC signatures?
- How widely do end-user clients (typically stub resolvers) verify DNSSEC signatures?

Top-Level Domains (TLDs) #

As I noted above, as a practical matter having a domain be DNSSEC signed requires that the parent domains be signed all the way to the root. Conversely, this requires that the root be signed and that most or all of the TLDs be signed as well, otherwise nobody can get their domains signed. It took a while, but this part is actually going pretty well. The root has been signed for some time and, as shown in ICANN's most recent data, the vast majority of TLDs (1372 out of 1489) are now signed, and this includes all the major ones like .org, .net, .com, and .io as well as some perhaps less exciting TLDs such as .lawyer, .wtf, and .ninja (yes, seriously). You're probably not going to be too sad to learn that .np (Nepal) is not signed, though I guess they should get on it.

Registered Domains #

You will sometimes hear that "TLD X is signed" but that just means that the records pointing to its children are signed, not that the actual records in its children are signed. As described above, this just lets you determine whether the children have deployed DNSSEC and if so what their key is, but it doesn't automatically make those domains secure. This is necessary for incremental deployment but makes the situation a little confusing.

However, you're not going to be serving your website out of .ninja (even if you're an actual ninja, though you can have ninja.wtf), so what's more important for security is how many of the second level and lower domains that you can actually register sign their contents.

Complete data doesn't seem to be available here, in part because the operators of the TLDs don't make their contents publicly available; you can access individual records but not just ask for all of them. For instance, if you want to have access to the contents[10] of .com and .net you need to contact Verisign. Taejoong Chung has a good rundown of the sources for their 2017 paper.

The question of how many registered domains are signed is remarkably hard to get a straight answer to, for several reasons. First, domain name operators are often unwilling to publish complete data on their domains so we have only partial data. Second, what often seems to get counted is DS records rather than signed domains, and there can be more than one DS record per domain. With that said, we do have a few sources of data here (mostly gathered from the Internet Society DNSSEC statistics site), and they all tell the same basic story, which is of a fairly low level of deployment.

For example, data collected by Viktor Dukhovni and Wes Hardaker at DNSSEC-Tools has around 17 million DS records (indicating DNSSEC signed domains), while Verisign reports there are around 360 million registrations, so this gives us below 5% deployment, though this may be a slight overestimate due to multiple DS records. [Update 2022-01-22: Viktor Dukhovni informs me that they are counting RRSets and not records, so these numbers are exact.]
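For the record, the "below 5%" figure is just the ratio of the two round numbers quoted above. A quick check, using those approximate values as given:

```python
# Approximate figures quoted in the text.
signed_domains = 17_000_000       # DS RRSets reported at DNSSEC-Tools
registrations = 360_000_000       # registrations reported by Verisign

deployment = signed_domains / registrations
print(f"{deployment:.1%}")  # 4.7%
```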

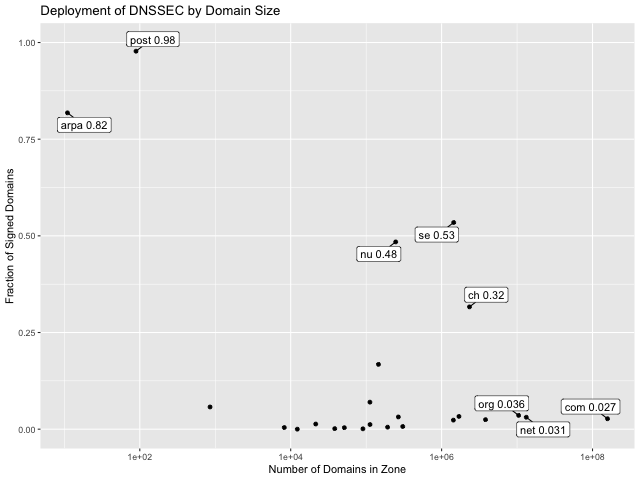

Digging deeper, the figure above shows the number of signed domains by TLD size (data from StatDNS). As above, the level of deployment is quite low across the board, with all the big domains under 4% (.com is at 2.8%). The biggest domain with significant deployment is .ch (Switzerland), at just below 1/3 deployment (with about 2.3 million domains), and the only substantial sized domain with even over 50% deployment is .se, at 53%. I'm not sure precisely why the fraction is so high for .ch and .nu but the large fraction in .se is probably due to financial incentives to enroll people, as reported by Chung et al.

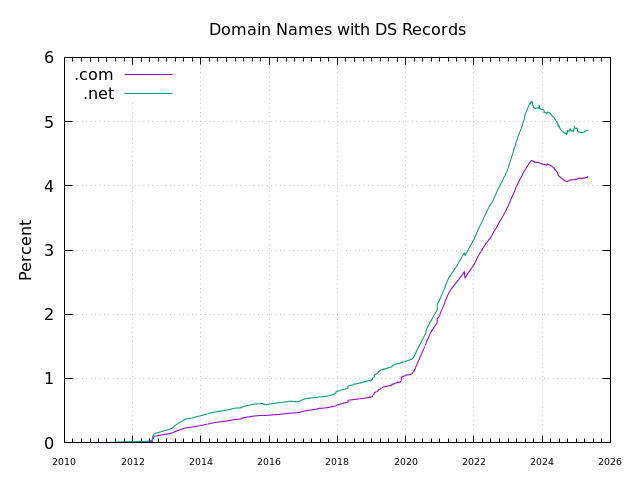

There is some evidence that the situation is improving slightly. Here's Verisign's

data for DNSSEC deployment in .com and .net (which they

operate):

As you can see, there was a big bump in 2020 which seems to be flattening in 2021. However, even that bump corresponds to about 1 percentage point a year, so unless things really accelerate, we're looking at quite some time before the majority of domains are DNSSEC signed.

Deployment of DNSSEC Validation #

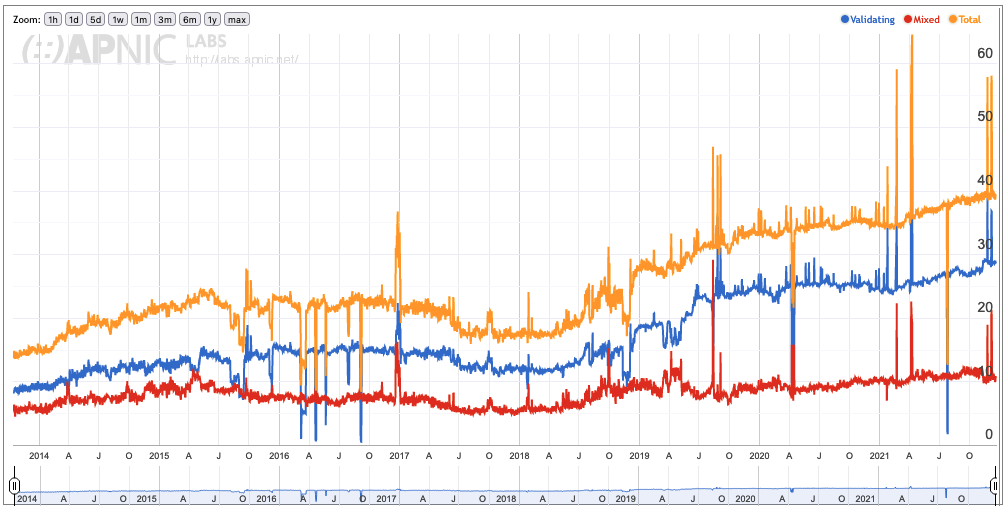

Having a domain DNSSEC-signed doesn't do any good if nobody checks, so how often are validations checked? The best data here comes from APNIC, which reports a validation rate of about a little under 30%:

.

.

Note: I'm not sure what "partial" validation means, but apparently about 40% of the survey has some validation. A lot of this seems to be driven by the use of public recursive resolvers like Google Public DNS which do DNSSEC validation.

Deployment of Endpoint DNSSEC Validation #

Although there is a significant amount of DNSSEC validation, to the best of my knowledge the vast majority of it is in recursive resolvers. Although most operating systems have some built-in DNSSEC validation capability, at least Mac and Windows don't do it by default. Similarly, even Web browsers which have their own resolvers--like Chrome--don't do DNSSEC validation. I understand that some mail servers do it for DANE keys, but I don't have any measurements of the scale.

Validation at the Endpoint versus the Recursive #

As noted above, nearly all validation happens at the recursive resolver rather than at the endpoint. This does provide some security value in that it protects against most attacks that are upstream of the recursive resolver, whether they are on-path or off-path. However, it doesn't prevent two very important classes of attack:

- Attacks between you and the recursive resolver. For instance, an attacker on the same network can inject their own responses. There are lots of situations where people are on untrusted networks--consider that there are probably a lot of network links between you and your ISP's resolver--so this is a real concern, especially if your link to that resolver is not cryptographically protected, which is true of classic DNS, though not of some of the newer emerging protocols such as DNS over HTTPS.

- Attacks by the recursive resolver. The recursive resolver can basically do anything it wants. If you're on a malicious network then it can simply respond to every query with its own response. They can also invisibly censor you just by removing responses. Moreover, there are plenty of cases where we know this happens already that people tend not to think of as malicious, for instance blocking at schools or redirecting you to a captive portal.

Obviously, if you validated the DNSSEC records yourself neither of these would work (as noted above, it would still be possible for the recursive resolver to censor specific domains, but it couldn't do so invisibly), but if you don't, then you're just trusting the recursive resolver to validate things and so you can't detect these forms of attack, and it's not safe to use the DNS for anything which relies on integrity (see Ptacek on this as well). This is sort of an odd position to be in because as a general matter you're just trusting some element on the network that you've never heard of. Even if you trust the network provided by your ISP, why would you trust the one provided by your airport or coffee shop?
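To see what "just trusting the recursive resolver" looks like on the wire, here is a sketch of a stub-style query and the check a non-validating client can make. As far as I know, a client signals interest in DNSSEC by setting the DO bit in an EDNS0 OPT record, and a validating resolver reports success with the AD bit in the response header; that bit is a single unsigned flag. The function names and the fake response header below are made up for illustration, and nothing is sent over the network.

```python
import struct

FLAG_QR = 0x8000   # this is a response
FLAG_RD = 0x0100   # recursion desired
FLAG_RA = 0x0080   # recursion available
FLAG_AD = 0x0020   # "authentic data": resolver says it validated the answer
EDNS_DO = 0x8000   # "DNSSEC OK" bit in the OPT record's flags
TYPE_A, TYPE_OPT, CLASS_IN = 1, 41, 1

def encode_name(name):
    labels = [label for label in name.rstrip(".").split(".") if label]
    return b"".join(bytes([len(label)]) + label.encode("ascii") for label in labels) + b"\x00"

def build_query(name, query_id=0x1234, udp_size=1232):
    """Build an A query with an EDNS0 OPT record that has the DO bit set."""
    header = struct.pack("!HHHHHH", query_id, FLAG_RD, 1, 0, 0, 1)
    question = encode_name(name) + struct.pack("!HH", TYPE_A, CLASS_IN)
    # OPT pseudo-record: root name, TYPE=OPT, CLASS=UDP payload size,
    # TTL field carrying the extended flags (DO set), and empty RDATA.
    opt = b"\x00" + struct.pack("!HHIH", TYPE_OPT, udp_size, EDNS_DO, 0)
    return header + question + opt

def resolver_claims_validated(response):
    """All a non-validating stub can check: is the AD bit set in the header?"""
    _query_id, flags = struct.unpack("!HH", response[:4])
    return bool(flags & FLAG_AD)

query = build_query("example.com")

# A made-up response header with AD set. Anyone who can write packets on the
# path, or the resolver itself, can produce exactly these bytes.
fake_header = struct.pack("!HHHHHH", 0x1234, FLAG_QR | FLAG_RD | FLAG_RA | FLAG_AD, 1, 1, 0, 0)
```

`resolver_claims_validated(fake_header)` returns `True` even though no signature was ever checked by the client, which is the gap the two attack classes above exploit.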

So, why don't endpoints validate? There are two main reasons. First, there are concerns about breakage. Right now, endpoints can resolve any domain regardless of DNSSEC status, but if they turn on DNSSEC validation, they will inevitably start to experience failures for some domains. To the extent to which these failures are due to actual attack, this is a good thing, but if many of them are due to other "innocuous" issues (e.g., misconfiguration) then that leads to a bad user experience because users suddenly will be unable to reach sites which they previously were able to reach. This then gets blamed not on the actual culprit but on the entity who made the change that resulted in breakage (what I've heard Adam Langley call the "Iron law of the Internet", namely that the last person to touch anything gets blamed), in this case whoever turned on endpoint validation. For this reason, vendors of end-user software such as operating systems or browsers are very conservative about making changes which might break something.

There are two primary potential sources of breakage for DNSSEC resolution: (1) misconfiguration of the domain (e.g., a broken signature) and (2) network interference. The good news is that there is now enough recursive side validation that we have probably gotten the level of misconfiguration down to tolerable levels. The data I have seen suggests small amounts of misconfiguration, probably concentrated in less popular domains. This leaves us with interference. Obviously some interference is due to actual attack or other deliberate DNS manipulation such as spoofing results for captive portal detection, but some of it is due to various kinds of network problems, such as intermediaries of various kinds who don't properly forward, or who filter out, unknown DNS record types such as the ones needed to make DNSSEC work. Note that these issues are not as severe for large recursive resolvers, which generally have a clear path to the Internet and so don't need to worry about intermediaries breaking them. Data is pretty thin on the ground here, with the last published information at least as old as 2015, when Adam Langley reported:

Some years ago now, Chrome did an experiment where we would lookup a TXT record that we knew existed when we knew the Internet connection was working. At the time, some 4–5% of users couldn't lookup that record; we assume because the network wasn't transparent to non-standard DNS resource types.

This was a long time ago and I and others have actually been trying to gather some more recent data, but it's not encouraging and even a very small increased failure rate (significantly below 1%) is enough to be problematic, because effectively you'll be breaking that fraction of your users.[11]

This is especially true when--as is the case here--the user value is not particularly high (the second main reason clients don't validate). From the perspective of the client--especially something like a browser or an OS--the most important information it is getting from the DNS is the IP address corresponding to the domain it is trying to reach and this information turns out not to be that security critical. First, if you are using an encrypted protocol like HTTPS--which something like 80% of Web page loads are--then even if an attacker manages to change DNS to point you to the wrong server, they will not be able to impersonate the right server. Of course, even if you are using encryption, an attacker might be able to interfere with your DNS to redirect your traffic as part of a DoS attack on some other server (as noted above, they can also mount a DoS attack on you, DNSSEC or otherwise), but this isn't anywhere near as bad as intercepting your traffic.

Second, even in cases where you aren't using encryption or you are using some kind of opportunistic encryption, it's not clear how valuable having the right IP is. As a practical matter, if an attacker is able to interfere with DNS traffic between you and the resolver--or they are the resolver--then it is quite likely that they can also attack your application traffic directly, which means that they can divert your traffic to their server even if you do get the correct IP address through DNS, so DNSSEC doesn't help much here either.

The outlook for deployment #

At the end of the day, DNSSEC deployment is a collective action problem:

- Because a relatively small number of domains are signed and the data isn't that important, resolvers--especially clients--have a relatively low level of incentive to deploy DNSSEC validation, especially when stacked up against the potential cost of high levels of breakage for users.

- Because not that many resolutions are validated and so few clients validate, the incentive for domain operators to sign their domains is relatively low. Not only does it come at a nontrivial risk of breakage if things are misconfigured, there are a number of additional operational costs. (Chung et al. discuss a number of these in a 2017 paper which focuses on the low level of support for DNSSEC by registrars, who are actually responsible for registering domains.) Moreover, the domain doesn't get a lot of benefit from being signed: if it wants real security it has to mandate HTTPS or the like anyway.

Moreover these two reasons interlock: as long as one side doesn't move the other side has a low incentive to move either and we're stuck in a low deployment equilibrium.

Next Up: DANE #

Because DNSSEC deployment is so low on both client and server, it's also impractical to design new features which depend on DNSSEC. For example, it would be nice to have a system which allowed domains to advertise keys to be used for non-TLS transactions (e.g., to sign vaccine passports). This is something you could do with the DNS, but obviously it needs to be secure and asking everyone to install DNSSEC would be impractical, so instead we get hacks like having the key served in a specific location on an HTTPS secured site. There are quite a few applications which would be much easier if we have DNSSEC but are not individually enough to motivate DNSSEC deployment and instead get done in less elegant ways that don't require collective action.

Next up, I'll talk about probably the most serious attempt to add such an application to the DNS: DNS Authentication of Named Entities (DANE) which uses the DNS to advertise TLS keys.

[1] Thomas Ptacek goes into this in some detail in his post Against DNSSEC.

[2] It's not exactly clear to me what the security properties of this are. In principle, if you have two keys which should be used to sign the records then you can make the signature as strong as the strongest one, not the weakest one, but it's somewhat subtle to get this right.

[3] It appears that this is another backward compatibility issue in that not all of the existing resolvers supported automatically updating to the new keys, and so you couldn't be confident that they would be universally accepted.

[4] It's possible to have what's called a "technically constrained" CA which can only sign specific domains, but many CAs are not so constrained, as it makes them much less useful.

[5] The CAA Record which tells CAs not to issue certificates unless they are listed in the record and Certificate Transparency, which publishes all existing certificates so that it's possible to detect misissuance.

[6] As I understand it, this key is just used to sign the keys that the child uses to sign the domain, but we can ignore this here.

[7] Technically, it indicates that there is no DS record for the child, which tells the client that the child has no key and is not using DNSSEC.

[8] It's possible to have little islands that are signed, but then you need some way to disseminate their keys, which undercuts the whole system.

[9] The RFCs also include an "indeterminate" state, but this seems to be basically the same as "insecure".

[10] It's actually not quite clear to me why they don't just publish this data; I suspect it's viewed as somehow proprietary.

[11] Sometimes people will propose that clients try to probe and see if the local network passes DNSSEC records correctly and only validate if so, but that just lets the local attacker disable validation by tampering with the probe.