Privacy Preserving Measurement 2: Anonymized Data Collection

Posted by ekr on 10 Oct 2021

In part I of this series, we discussed the conventional obvious way of taking measurements, which is to say collecting a bunch of data and analyzing it locally. This is a fine practice when the data itself isn't sensitive (e.g., outdoor temperature readings from your own sensors), but is less good when you're collecting data about people that they might consider sensitive (and any data value probably has someone who considers it sensitive). It's better to have some technical mechanism which protects user data.

For some measurements, you can simply not collect identifying data. For instance, if you have a table at a local park doing a survey, you can just not ask people for their names. In many cases, however, things are more complicated. One important example is measurements taken from end-user devices (e.g., on-line surveys, client-side telemetry, etc.) Because of the way the Internet works, these reports naturally have the IP address associated with them and in many cases that can be used to map back to the user's identity. Even in cases where the software isn't running on the subject's machine, there can still be risks. For instance, if you have someone going door to door to collect information, the time of the report plus the route the person takes can be used to infer approximately which report corresponds to which subject.

The most natural technical mechanism to address these issues is to collect user data but anonymize it. The basic idea behind anonymization is to separate the data being collected from the identity of the user that is being collected from so that you can work on the data without knowing anything else about the user.

Central Anonymization #

The simplest thing to do is just to collect all the data centrally and then strip off the identifying information and then (hopefully) discard the raw data. So you start with a table like this with names:

| Name | Gender | Height (cm) | Salary ($) |

| John Smith | M | 160cm | 100000 |

| Jane Doe | M | 162 | 111005 |

| Bob Smith | F | 155 | 95000 |

| ... |

and end up with a table like this:

| Id | Gender | Height (cm) | Salary ($) |

| 1234 | M | 160cm | 100000 |

| 5678 | M | 162 | 111005 |

| 910A | F | 155 | 95000 |

| ... |

In this table, I've replaced the names with identifiers; you don't need to do this but it's convenient to have some way to refer to each record that isn't just the row number in the table. Obviously, you have to select the identifier in such a way that it doesn't leak user identity; see below for more on this.

At some level, this is just a policy mechanism and from the subject's perspective depends on trusting the data collector to actually delete the raw data, but it's significantly better than nothing. First, if the data collector is behaving as advertised, this prevents retrospective policy changes where your data is collected under one regime and then the data collector decides to use the data in a different way than they said they would. Second, it's possible to have an independent audit that the data collector is behaving as advertised, at least at one point in time. With that said, it's obviously better to have technical controls that don't depend on the data collector behaving correctly, even at the initial point of data collection.

Anonymizing Proxies #

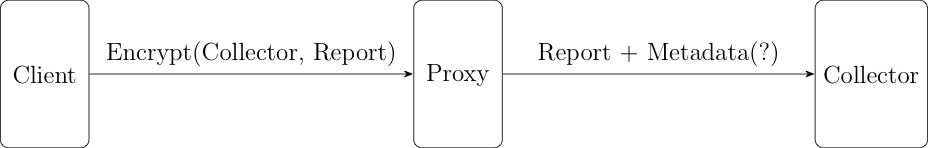

The typical solution is to have an anonymizing proxy which removes identifying information from reports. Assume you have some piece of software (the "client") which is collecting the data. That client might be being operated directly by the subject of the measurement or by some sort of field agent doing the measurement (obviously the former is better). In either case, the client has to be trusted (see here for more on this. When the data is initially collected, the client encrypts it for the data collector using some sort of public key encryption scheme[1] and then sends it to some proxy, as shown below:

The proxy strips off whatever identifying information (e.g., the IP address) was originally associated with the report, thus preventing it from being available to the collector. This leaves the data collector with the same kind of data it would have had in the previous example.

An anonymizing proxy is a good way to implement centralized anonymization, but it's better if it's run by some sort of trusted third party. In that case, the identity of the subject is protected as long as the proxy and the data collector don't collude. You can see this intuitively by realizing that the subject's identity and their reported data are never available to the same entity: the proxy just sees the identity and the encrypted report and the collector sees the report (in both encrypted and plaintext form) but never has the identity.[2]

Note that in the diagram above, the proxy also attaches some metadata to the submission. This is data added by the proxy rather than by the client. For instance, the proxy might indicate the rough geographic location of the client as derived from the client's IP address; this allows for geographic segmentation without revealing the client's entire identity. Additional metadata isn't necessary but it can be convenient in some cases.

Attacks on Anonymization #

Anonymization is a good start but unless done very carefully it can yield significantly less privacy than expected.

High-dimensional data #

The first problem is that if you have enough individual data values it's often possible to successfully narrow down someone's identity even if those individual values don't seem that identifying (this is effectively the same problem as Web browser fingerprinting). For example, suppose you have a data set where you collect the age, gender, and nationality for every member of a household, as well as the census tract. Census tracts contain a few thousand people--maybe 1-2 thousand households--so any given combination of the above demographic variables is likely to contain a fairly small number of households. This isn't necessarily a problem if the data is boring, but what if the data set also contains something more sensitive, like household income? Now someone who has access to the data set can look up people's incomes based on (semi)publicly known demographic information. This attack is called de-anonymization and is a generic problem with this kind of high-dimensional data set. There have been a number of high-profile cases where anonymized data sets turned out to be de-anonymizable, including medical records (by Latanya Sweeney) and Netflix viewing histories (by Narayanan and Shmatikov).

There are a number of potential defenses against this kind of de-anonymization--I'll be talking about adding random noise for differential privacy in a future post--but one obvious thing to do is to disaggregate the data set so that not all the information is available together. For instance, maybe we don't need the ages and nationalities of everyone in the household in order to to ask questions about people's income, so we might have two submissions:

- census tract, household size + household income

- census tract, age, gender, and nationality of everyone in the household

This isn't perfect because there are going to be some edge cases (there might only be one household with 9 people in it) but it will substantially increase privacy.[3] In order for it to work, however, it's absolutely critical that the disaggregated data values can't be relinked. For instance, you can't issue the same pseudonymous identifier to each submission for the same subject or you've just re-linked the submissions you de-linked by disaggregating them.

The second problem with disaggregation is that it reduces your flexibility: if you have all the data in one table then it's easier to ask new questions, but if it's disaggregated, then that becomes harder. For instance, if we disaggregate household income from the demographics of the individual participants, then we can no longer ask if there is an influence of nationality on household income. This isn't ideal because a lot of the value of just collecting anonymized data is to preserve this kind of flexibility, but unfortunately there's no really great way around it with this kind of system.

Time-based correlation and shuffling #

If you disaggregate the data for a given subject into multiple submissions as discussed above but you then transmit them right after the other then the value of the disaggregation goes down dramatically: the server sees a stream of submissions come in and even if they are mixed a little bit, it's usually pretty easy to put them back together by lining up the overlapping values (census tract + household size). It may be imperfect but it's likely to be pretty coarse.

It's of course possible for the client to shuffle the submissions locally by waiting a random time between submissions, but it's easier if the proxy does it. There are a number of possible shuffling strategies with various tradeoffs of timeliness and privacy. Tom Ritter has a good overview of this kind of technique for anonymous messaging, where it's called a mix networks.

Identifier selection #

As noted above, it's convenient to add a pseudonymous identifier to each anonymized submission, but some care needs to be taken in generating identifiers. Ideally, the identifier would be generated after anonymization, because then you can have high confidence that it doesn't include any extra information that the data collector doesn't have already. However, in some cases that's undesirable. For instance, you might want to be able to connect multiple submissions by the same client over time. The best way to do this is to generate the identifiers randomly, because again this gives you high confidence you aren't leaking information.[4].

In general, you really don't want the identifier to depend on the client identity in any way because that's an opportunity for compromise. In particular, hashing the client's true identity usually does not provide protection against reversing the identifier unless the true identity is itself very high entropy (i.e., there are so many possible values that it is infeasible to try them all). The problem is that if the hash function is public knowledge it's possible to just try all the input identities until one matches. This is a mistake that gets made over and over with e-mail addresses.

A less bad but still not great option is for the proxy to compute some sort of keyed pseudorandom function over the identifier with the key being known only to the proxy. This doesn't have the same problem of the data collector exhaustively searching the identifier space but it's still possible for the proxy to do so if it is later compromised. In general, if you have a system which requires the proxy to assign unique user identifiers to submitted data, it's probably worth rethinking your design.

Proxy Implementations #

Generic Proxies #

There are already a number of generic proxying systems that people use for private Internet access but which can also be used for anonymized data collection. This includes both IP-level Virtual Private Networks (VPNs) and Application-level proxy networks like Firefox Private Network, iCloud Private Relay, or Tor. Private Relay and Tor have the advantage that they include multiple hops so you need to extend even less trust to any individual entity. Although these approaches are useful, because they are generic they require some care to use successfully.

As a concrete example, if you use a generic proxy then the proxy can't shuffle your data because your interaction with the server is interactive. Thus, the client has to do any shuffling required which means multiple connections. This comes at a performance and bandwidth cost. However, even if you do, things can go wrong. For instance, the client is most likely connecting to the server using TLS, but if it uses TLS session resumption then there is a risk that the server can correlate multiple connections via the TLS session ID/ticket. A related problem is that if the client is also making non-anonymous connections to the server these might be linkable to its submitted data.

There are also some performance issues with setting up generic connections for a single submission (for instance, the proxy can't share one connection to the server), though those aren't necessarily prohibitive.

HTTP-Level Proxies #

The IETF is currently in the process of standardizing an HTTP-level proxy system called Oblivious HTTP Application Intermediation[5]. Instead of being generic system, it's specifically designed for lightweight submission of individual data. The client is configured with the server's key and just encrypts a single HTTP message for the server using Hybrid Public Key Encryption (HPKE). These can all be multiplexed over the same server connection and don't have any linkage identifiers. In principle, the proxy could also shuffle the incoming messages as well to prevent time-based correlation, though that's not currently in the specification.

Enclaves #

Bittau et al. (at Google/Google Brain) proposed a system called PROCHLO which is essentially an anonymizing proxy built into an SGX enclave. Briefly, an enclave is a mechanism for having a sealed off section of a microprocessor which (1) is not directly accessible from the rest of the processor and (2) is able to attest to what software is running on it. The idea here is that the proxy runs on the enclave and therefore the client can be sure that it is handling its submissions as advertised.[6]

The cool thing about PROCHLO is that it's not supposed to need a trusted third party in the loop: because the enclave guarantees the software running on it, the data collector can just run the whole thing in their own data center with the client checking the attestation on the proxy. This is a good idea in theory, but unfortunately there have been a number of papers attacking SGX, so in practice it's quite unclear whether this kind of enclave can be made secure against an attacker who has control--especially physical control--of the computer it's running on, so at least for now I'd have more confidence in a proxy actually run by a third party (though it wouldn't hurt to have it running in an enclave).

Next Up #

Anonymizing proxies are a useful technique that have the virtue of being both lightweight and easy to understand. In many cases they are all you need, but they also require some care to use properly and this can often give you either less flexibility or less privacy than you would naively hope. Next up, I'll be talking about some fancy cryptographic techniques that have the potential to offer a better set of tradeoffs in some settings.

You need public key because every subject has to have the same key or this lets you learn information about which client is which. ↩︎

Note that to really guarantee this, you also need the traffic between the client and the proxy to be encrypted, otherwise a sufficiently capable collector (i.e., one who could see the incoming traffic to the proxy) could correlate the incoming and outgoing reports. It's also important that this encryption be forward secret so that subsequent compromise or cheating by the proxy can't re-link the submissions and their metadata. ↩︎

Analyzing the extent to which it does is a nontrivial exercise. ↩︎

Technical note: if you have disaggregated submissions, perhaps with keyed pseudorandom function of the submission type ↩︎

Yes, we started with the acronym OHAI and worked backwards. ↩︎

The attestation is provided using a key burned into the processor, so really you're trusting Intel. ↩︎