Defending against Bluetooth tracker abuse: it’s complicated

Building a kinder, gentler panopticon

Posted by ekr on 08 May 2023

Bluetooth-based tracking tags like AirTags and Tiles are fantastically useful for finding lost stuff like your keys, your bike, or your cat. Unfortunately, they are a dual-use technology that is also easy to use for surreptitiously tracking other people. This isn't a complicated attack to mount: you get a tracking tag, pair it with your own phone, plant it on your victim, and then use the "find my stuff" feature to monitor their location. This unpleasant fact isn't news: there have been concerns about misuse of these technologies for years, especially after the release of AirTags (see my earlier post for some initial thoughts).

On Tuesday Google and Apple published a set of guidelines for how trackers should behave to reduce the risk of unwanted tracking. This post takes a look at that document and the bigger problem space.

Background: Bluetooth Trackers #

Because these tracking systems are non-interoperable, they don't necessarily all work the same way. However, Apple provides some detail about how the system works, and back in 2021 Heinrich, Stute, Kornhuber, and Hollick reverse engineered the system and published a paper in PoPETS describing how it works as well as some vulnerabilities.

The obvious design for this kind of system would be to just have each tag have a single fixed identifier which it would broadcast periodically over Bluetooth Low Energy (BLE). As a practical matter, the tag doesn't actually broadcast unless it's out of range of all the devices its owner has paired it with; if it's in range, then the owner's device can find it directly. Whenever the tag was within range of a participating device (e.g., a phone), that phone would then upload the tag's identifier and its own position to some central server. When you lost your tag, you would then contact that server and request its last known location, as shown in the diagram below:

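[Diagram: a phone near the tag uploads the tag's identifier and the phone's location to the central server, which the owner then queries.]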

This system has some obvious security and privacy issues:

- The service can track the position of any tag (and in fact all tags) just by looking at the database.

- In fact, anyone can track a tag if they know the identifier, so if you see it once, you can just query the database.

- Even without access to the database, an attacker can reidentify a given device. For instance, if you had a receiver at the entrance to a store, you could see when the same person came by again (this is a similar set of issues to those with license plates).

The second and third attacks can be addressed by just having a rotating identifier. I.e., each tag $i$ has a secret value $SK_i$ which it shares with its owner when the two are paired. Instead of broadcasting $SK_i$ directly, it uses it as the seed for a pseudorandom function (PRF) to create a rotating identifier $ID_{i,t} = \mathrm{PRF}(SK_i, t)$, where $t$ is the current time period, and broadcasts that instead. Each identifier is used for a fixed time (say 15 minutes), after which the tag generates and broadcasts a new one. The device owner knows $SK_i$ and can use it to generate $ID_{i,t}$, so it can still query the central service just by asking for the IDs for recent time periods, but someone who just observes a single ID can't use it to query the service for the locations of other IDs for the same tag (and of course they already know the location at the time of observation).
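To make the rotating-identifier idea concrete, here is a minimal Python sketch. It assumes HMAC-SHA256 as the PRF, 15-minute periods, and identifiers truncated to 16 bytes; these are illustrative choices rather than what any particular vendor uses, and `rotating_id` and `ids_for_recent_periods` are hypothetical helper names.

```python
import hashlib
import hmac
import secrets

# Assumption: 15-minute periods, as in the example above.
PERIOD_SECONDS = 15 * 60


def rotating_id(sk: bytes, unix_time: int) -> bytes:
    """ID_{i,t} = PRF(SK_i, t), with HMAC-SHA256 standing in for the PRF."""
    period = unix_time // PERIOD_SECONDS  # t is the index of the current period
    mac = hmac.new(sk, period.to_bytes(8, "big"), hashlib.sha256)
    return mac.digest()[:16]  # truncated to a hypothetical 16-byte identifier


def ids_for_recent_periods(sk: bytes, now: int, periods: int) -> list[bytes]:
    """The IDs the owner regenerates to query the service for recent periods."""
    return [rotating_id(sk, now - k * PERIOD_SECONDS) for k in range(periods)]


sk_i = secrets.token_bytes(32)  # shared by tag and owner at pairing time
now = 1_683_500_000             # an arbitrary Unix timestamp

observed = rotating_id(sk_i, now)                   # what a passer-by hears
owner_query = ids_for_recent_periods(sk_i, now, 4)  # the last hour of IDs
print(observed in owner_query)  # True
```

An observer who hears `observed` only has one PRF output; without `sk_i` they cannot compute the identifiers for any other period, while the owner can regenerate all of them.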

Rotating IDs #

This also partly solves the problem of the service tracking the tag, because the service also cannot link up multiple identifiers, so all it has is a set of locations. However, if there are comparatively few tags, then the service can infer people's behavior just by looking at the unlinked locations. E.g., if I see two IDs on Highway 101 traveling in opposite directions (inferred from the lane they are in) and then some other ID getting off at a southbound exit, I can infer that there was a single device that was going south and then exited, but this is less information than a fixed identifier would reveal. In addition, when someone queries for the location of their tag, the service provider gets the IDs for a range of time periods, which it knows all correspond to the same device, and can then link up the motion of the tag during that time range.
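The linking problem in the last sentence can be seen in a toy model of the service's database. The data structures, the helper names (`record_sighting`, `answer_owner_query`), and the IDs and places are all hypothetical; the point is simply that a single owner query hands the service a set of IDs that it now knows belong to one tag.

```python
from collections import defaultdict

# Toy service database for the rotating-ID design. The service stores
# sightings under whatever ID was heard; it never learns any SK_i.
sightings = defaultdict(list)  # tag ID -> [(time, location), ...]


def record_sighting(tag_id: bytes, t: int, location: str) -> None:
    """A finder reports hearing tag_id at time t near location."""
    sightings[tag_id].append((t, location))


def answer_owner_query(query_ids: list[bytes]) -> list[tuple[int, str]]:
    """Answer an owner's query for a batch of IDs.

    The batch itself tells the service that all of these IDs belong to one
    tag, so it can stitch the matches into a single time-ordered trail.
    """
    trail = [s for tag_id in query_ids for s in sightings.get(tag_id, [])]
    return sorted(trail)


# Made-up IDs and places for illustration.
record_sighting(b"id-2", 0, "Highway 101 southbound")
record_sighting(b"id-9", 450, "Highway 101 northbound")  # some other tag
record_sighting(b"id-1", 900, "southbound exit")
print(answer_owner_query([b"id-1", b"id-2"]))
# [(0, 'Highway 101 southbound'), (900, 'southbound exit')]
```

Before the query, `id-1` and `id-2` were just two unrelated sightings; after it, the service holds a linked trajectory.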

Apple's design (the best documented) addresses this by having the locations where tags are detected encrypted to the device owner. This works similarly to the rotating ID system except that instead of generating a rotating ID, the tag generates a rotating private/public key pair $(Priv_{i,t}, Pub_{i,t})$. The tag broadcasts $Pub_{i,t}$ just as it would the ID, but when a device sees the broadcast, it uploads its own location encrypted under that public key. When the device owner wants to find the tag, it queries the server using the public key[1] (just as it would have queried with the rotating ID) and gets the encrypted value. Because it shares $SK_i$ with the tag, it can generate $Priv_{i,t}$ and decrypt the encrypted location, as shown in the figure below:

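[Figure: a finder uploads its location encrypted under $Pub_{i,t}$; the owner queries the server and decrypts the report with $Priv_{i,t}$.]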
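The following sketch walks through the encrypted-report flow end to end. It is a toy under loudly stated assumptions: it uses Diffie-Hellman-style arithmetic modulo the Mersenne prime 2^521 - 1, with a SHA-256 keystream and HMAC, purely so that it runs on Python's standard library. Apple's design uses P-224 elliptic-curve keys, and none of the helper names here (`keypair_for`, `lookup_key`, `encrypt_report`, `decrypt_report`) come from Apple. `lookup_key` hashes the public key, since the server is queried with a hash of the key rather than the key itself.

```python
import hashlib
import hmac
import secrets

# TOY parameters (assumption): arithmetic mod the Mersenne prime 2**521 - 1
# with base 3. Shows the data flow only; not a vetted group, not Apple's scheme.
P = 2**521 - 1
G = 3
ELEMENT_BYTES = 66  # 521 bits rounded up to whole bytes
PERIOD_SECONDS = 15 * 60


def keypair_for(sk: bytes, unix_time: int) -> tuple[int, int]:
    """Derive (Priv_{i,t}, Pub_{i,t}) deterministically from SK_i and the period."""
    period = unix_time // PERIOD_SECONDS
    seed = hmac.new(sk, period.to_bytes(8, "big"), hashlib.sha512).digest()
    priv = int.from_bytes(seed, "big") % (P - 1) or 1
    return priv, pow(G, priv, P)


def lookup_key(pub: int) -> str:
    """Index under which the server stores reports: a hash of the public key."""
    return hashlib.sha256(pub.to_bytes(ELEMENT_BYTES, "big")).hexdigest()


def _derive_keys(shared: int) -> tuple[bytes, bytes]:
    raw = shared.to_bytes(ELEMENT_BYTES, "big")
    return hashlib.sha256(b"enc" + raw).digest(), hashlib.sha256(b"mac" + raw).digest()


def _xor_keystream(key: bytes, data: bytes) -> bytes:
    # SHA-256 in counter mode as a toy stream cipher.
    stream = b"".join(
        hashlib.sha256(key + i.to_bytes(4, "big")).digest()
        for i in range(len(data) // 32 + 1)
    )
    return bytes(a ^ b for a, b in zip(data, stream))


def encrypt_report(pub: int, location: bytes) -> tuple[int, bytes, bytes]:
    """Finder side: ephemeral Diffie-Hellman with Pub_{i,t}, then encrypt-then-MAC."""
    eph = secrets.randbelow(P - 2) + 1
    enc_key, mac_key = _derive_keys(pow(pub, eph, P))
    ciphertext = _xor_keystream(enc_key, location)
    tag = hmac.new(mac_key, ciphertext, hashlib.sha256).digest()
    return pow(G, eph, P), ciphertext, tag


def decrypt_report(priv: int, eph_pub: int, ciphertext: bytes, tag: bytes) -> bytes:
    """Owner side: recompute the shared secret with Priv_{i,t} and decrypt."""
    enc_key, mac_key = _derive_keys(pow(eph_pub, priv, P))
    expected = hmac.new(mac_key, ciphertext, hashlib.sha256).digest()
    if not hmac.compare_digest(expected, tag):
        raise ValueError("report was not encrypted to this key")
    return _xor_keystream(enc_key, ciphertext)


sk_i = secrets.token_bytes(32)   # shared with the owner at pairing
now = 1_683_500_000              # an arbitrary Unix timestamp

_, pub = keypair_for(sk_i, now)  # the tag broadcasts pub
# A finder uploads made-up coordinates, indexed by the hash of pub.
server = {lookup_key(pub): encrypt_report(pub, b"37.42,-122.08")}

priv, pub_again = keypair_for(sk_i, now)  # the owner re-derives the keypair
print(decrypt_report(priv, *server[lookup_key(pub_again)]))  # b'37.42,-122.08'
```

The server only stores and returns opaque tuples under a hash; it never sees `priv` or the plaintext location.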

Privacy Properties #

This system has significantly improved privacy properties. As with a simple rotating identifier, an attacker can't track a tag using multiple observations over an extended period. And because the reports are encrypted, the service provider is not able to directly determine the actual location of the device. However, that doesn't mean that the service provider doesn't learn anything. In particular:

- If two owners both query the location of lost tags which are reported by the same device, then it allows the service to infer that the owners were at one point in the same location (this attack is reported in the Heinrich et al. paper).

- If two devices both report the location of the same tag, then the provider can infer that those devices were in the same location at the time of the report.

- If the service provider has an independent way of learning the location of a reporting device (for instance by IP location or because the owner uses some location-based service) and then the owner queries for the tag's location, the service gets to learn information about the owner's movements (because that is where they probably lost the tag). This attack is exacerbated by the fact that you want to query multiple keys (one for each time range), so the service might learn multiple locations for the same tag and be able to link them.

The root cause of all of these issues[2] is that the service gets to learn the identity of reporting devices when they make reports, as well as potentially of the device owner when they query for location. This part of Apple's design isn't very clearly documented, but presumably the rationale for identifying the endpoints is to prevent abuse (e.g., forged location reports) by requiring that they be genuine Apple devices (see Section 9.4 of Heinrich et al.). It should be possible to address this issue using standard anonymity techniques such as Oblivious HTTP,[3] though it doesn't appear Apple does that.
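A toy model of the service's logs shows how the first two inferences fall out of knowing who uploaded each report and who queried for it. The log format, account names, and helper functions are hypothetical, and the third attack (correlating with IP-based location) isn't modeled.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical service logs. Report contents are encrypted, but each upload
# arrives from an identified device, and each query from an identified owner.
reports = [  # (reporter device, lookup key, time)
    ("reporter-A", "key-1", 100),
    ("reporter-A", "key-2", 105),
    ("reporter-B", "key-2", 110),
]
queries = {"owner-X": {"key-1"}, "owner-Y": {"key-2"}}  # owner -> keys queried


def colocated_reporters(reports):
    """Devices that reported the same key were near the same tag."""
    by_key = defaultdict(set)
    for reporter, key, _time in reports:
        by_key[key].add(reporter)
    return {
        frozenset(pair)
        for reporters in by_key.values()
        for pair in combinations(sorted(reporters), 2)
    }


def linked_owners(reports, queries):
    """Owners whose tags were reported by the same device were once co-located."""
    reporters_for = {
        owner: {reporter for reporter, key, _time in reports if key in keys}
        for owner, keys in queries.items()
    }
    return {
        frozenset((a, b))
        for a, b in combinations(sorted(reporters_for), 2)
        if reporters_for[a] & reporters_for[b]
    }


print(sorted(sorted(p) for p in colocated_reporters(reports)))
# [['reporter-A', 'reporter-B']]
print(sorted(sorted(p) for p in linked_owners(reports, queries)))
# [['owner-X', 'owner-Y']]
```

Neither inference needs to decrypt anything; both come from the identities attached to uploads and queries, which is what an anonymizing proxy would strip away.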

Unwanted Tracking #

The privacy mechanisms described above are about preventing other people from learning the location of your tags, but the way you use a system like this to track someone else is to attach one of your tags to something of theirs and then query the system to see where your tag is. This is a much harder problem to solve because the whole point of the system is that the tag isn't attached to you (that's why you're looking for it!) and there's no real technical way to distinguish the case where I accidentally left my keys in your car from the one where I maliciously stuck an AirTag to your car to track you.

Instead, the countermeasures that Apple and others have designed seem to center around making this situation detectable. Specifically:

- If AirTags are away from their owners for "an extended period of time" they make a sound when moved.

- If your iOS device detects that an AirTag that doesn't belong to you is moving with you, it will notify you on the device and then you can try to find it and figure out what's going on.

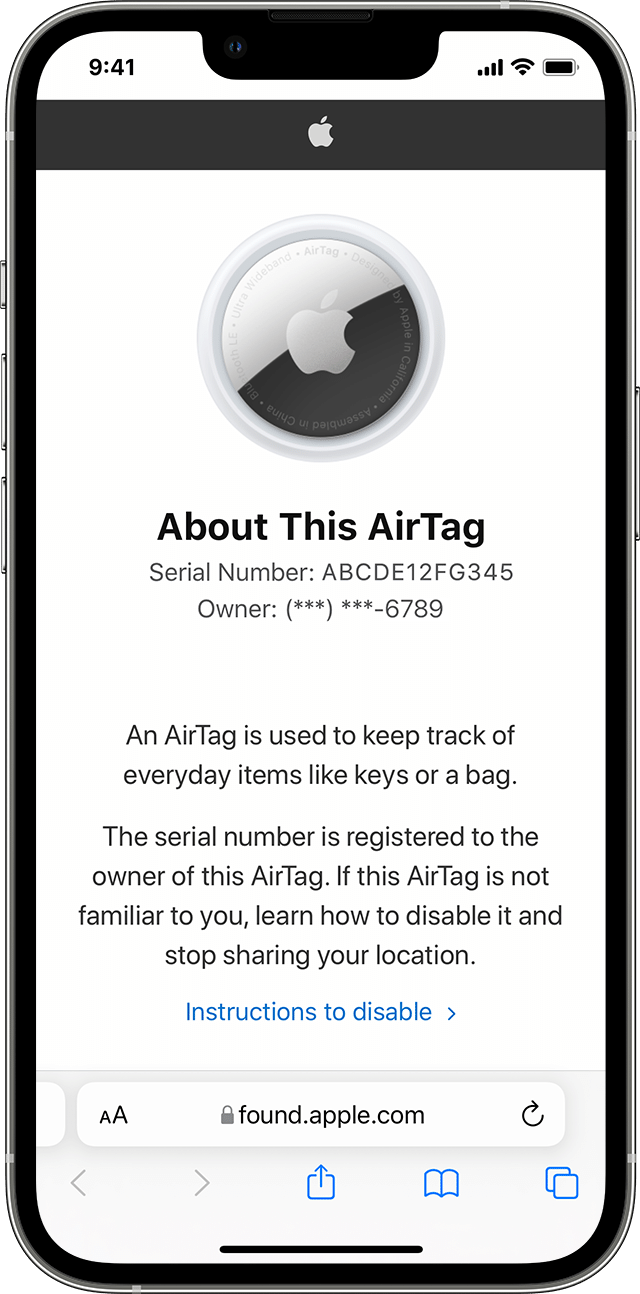

Once you have detected a tag that appears to be following you, AirTags also include a feature that lets you partially identify the owner of the tag, as long as you can physically access the tag.

[Source: Apple]

My personal experience is that these features are both fairly hit and miss. In terms of the sound notification, the speaker in AirTags is pretty quiet and the noise is kind of intermittent. We use AirTags to keep track of our cats, but they're paired to my wife's phone, not mine. After she had been out of town for several days, I finally noticed the AirTags making noise and took them off the cats' collars, but the first time this happened I probably heard the sound three or four times (and who knows how many times I didn't hear it) before I figured out what it was. We're all constantly surrounded by stuff beeping, so it's easy to get habituated to it.

Similarly, I've had the "someone is moving with you" alert trigger a number of times (most recently on Saturday), such as when someone accidentally left their AirPods around, but that alert also takes a while to trigger and is easy to ignore. I imagine both of these features would work a lot better if you were really worried about being tracked, but at least in my experience there are a lot of false positives, which makes the whole system less useful than one might like.

The Apple/Google Draft #

On Tuesday, Apple and Google published a document describing guidelines for how trackers ought to behave in order to make unwanted tracking easier to detect.

Today Apple and Google jointly submitted a proposed industry specification to help combat the misuse of Bluetooth location-tracking devices for unwanted tracking. The first-of-its-kind specification will allow Bluetooth location-tracking devices to be compatible with unauthorized tracking detection and alerts across iOS and Android platforms. Samsung, Tile, Chipolo, eufy Security, and Pebblebee have expressed support for the draft specification, which offers best practices and instructions for manufacturers, should they choose to build these capabilities into their products.

Mostly this document provides detailed specifications of the behaviors I've described informally above. For instance, here's the portion describing how the audible alerts should work:

After T_(SEPARATED_UT_TIMEOUT) in separated state, the accessory MUST enable the motion detector to detect any motion within T_(SEPARATED_UT_SAMPLING_RATE1).

If motion is not detected within the T_(SEPARATED_UT_SAMPLING_RATE1) period, the accessory MUST stay in this state until it exits separated state.

If motion is detected within the T_(SEPARATED_UT_SAMPLING_RATE1) the accessory MUST play a sound. After first motion is detected, the movement detection period is decreased to T_(SEPARATED_UT_SAMPLING_RATE2). The accessory MUST continue to play a sound for every detected motion. The accessory SHALL disable the motion detector for T_(SEPARATED_UT_BACKOFF) under either of the following conditions:

* Motion has been detected for 20 seconds at T_(SEPARATED_UT_SAMPLING_RATE2) periods.
* Ten sounds are played.

If the accessory is still in separated state at the end of T_(SEPARATED_UT_BACKOFF), the UT behavior MUST restart.
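To see how the excerpt above behaves over time, here is a small Python simulation. The draft names the timers, but this sketch picks its own values for them (10 s, 0.5 s, and a 6-hour backoff), and it interprets "motion has been detected for 20 seconds" as 20 seconds elapsed since the first detected motion in the current cycle. Both are assumptions, and `UTParams` and `simulate_ut_alerts` are hypothetical names. Time 0 is the moment `T_(SEPARATED_UT_TIMEOUT)` expires.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class UTParams:
    # Hypothetical timer values; the excerpt only names these timers.
    sampling_rate1: float = 10.0   # T_(SEPARATED_UT_SAMPLING_RATE1), seconds
    sampling_rate2: float = 0.5    # T_(SEPARATED_UT_SAMPLING_RATE2), seconds
    backoff: float = 6 * 3600.0    # T_(SEPARATED_UT_BACKOFF), seconds
    # Limits taken from the excerpt.
    max_motion_seconds: float = 20.0
    max_sounds: int = 10


def simulate_ut_alerts(moving: Callable[[float], bool], until: float,
                       params: Optional[UTParams] = None) -> List[float]:
    """Return the times (seconds) at which the tag plays a sound.

    The tag is assumed to stay in separated state for the whole run.
    """
    p = params or UTParams()
    sounds: List[float] = []
    t = 0.0
    period = p.sampling_rate1
    first_motion: Optional[float] = None
    cycle_sounds = 0
    while True:
        t += period
        if t >= until:
            return sounds
        if not moving(t):
            continue  # no motion: keep sampling at the current rate
        sounds.append(t)  # a sound for every detected motion
        cycle_sounds += 1
        if first_motion is None:
            first_motion = t
            period = p.sampling_rate2  # sample faster after the first motion
        if (cycle_sounds >= p.max_sounds
                or t - first_motion >= p.max_motion_seconds):
            t += p.backoff             # motion detector disabled
            period = p.sampling_rate1  # then the UT behavior restarts
            first_motion = None
            cycle_sounds = 0


print(simulate_ut_alerts(lambda t: True, until=60.0))
# [10.0, 10.5, 11.0, 11.5, 12.0, 12.5, 13.0, 13.5, 14.0, 14.5]
```

With continuous motion and these made-up timer values, the tag plays ten beeps in under five seconds and then stays silent for the entire backoff period.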

Not a full specification #

What this document is not, however, is a complete specification of a tracking system. In particular, it doesn't cover any of the fancy (well, fancy-ish) cryptography I described above. Instead, it describes a Bluetooth container for the messages, with the following contents:

| Bytes | Description | Requirement |
|---|---|---|
| 0-5 | MAC address | REQUIRED |
| 6-8 | Flags TLV; length = 1 byte, type = 1 byte, value = 1 byte | OPTIONAL |
| 9-12 | Service data TLV; length = 1 byte, type = 1 byte, value = 2 bytes (TBD value) | REQUIRED |
| 13 | Protocol ID (TBD value) | REQUIRED |
| 14 | Near-owner bit (1 bit) + reserved (7 bits) | REQUIRED |
| 15-36 | Proprietary company payload data | OPTIONAL |
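The byte layout in the table can be checked with a short `struct`-based sketch. The service-data UUID and protocol ID are TBD in the draft, so the values here are placeholders; placing the near-owner flag in the most significant bit of byte 14 and using `0x06` as the flags value are also assumptions, as are the helper names. The final check shows the arithmetic behind the key-size question: a 37-byte legacy advertisement leaves 22 payload bytes (offsets 15-36), fewer than a 28-byte P-224 key needs.

```python
import struct

# Placeholders: the draft leaves the service UUID and protocol ID as "TBD".
PLACEHOLDER_SERVICE_UUID = 0xFFFF
PLACEHOLDER_PROTOCOL_ID = 0x00
NEAR_OWNER_MASK = 0x80   # assumption: near-owner flag in the top bit of byte 14
MAX_PDU = 37             # 6-byte address + 31 bytes of legacy advertising data
PAYLOAD_OFFSET = 15
MAX_PAYLOAD = MAX_PDU - PAYLOAD_OFFSET  # offsets 15-36, i.e. 22 bytes


def build_advertisement(mac: bytes, near_owner: bool, payload: bytes) -> bytes:
    """Lay out an advertisement following the table's byte offsets."""
    if len(mac) != 6:
        raise ValueError("MAC address must be 6 bytes")
    total = PAYLOAD_OFFSET + len(payload)
    if total > MAX_PDU:
        raise ValueError(f"{total} bytes exceeds the {MAX_PDU}-byte legacy PDU")
    flags_tlv = bytes([2, 0x01, 0x06])  # length, AD type "Flags", example value
    # Service data: length counts type + UUID + protocol ID + status + payload.
    service_len = 1 + 2 + 1 + 1 + len(payload)
    service_hdr = struct.pack("<BBH", service_len, 0x16, PLACEHOLDER_SERVICE_UUID)
    status = NEAR_OWNER_MASK if near_owner else 0x00  # other 7 bits reserved
    return mac + flags_tlv + service_hdr + bytes([PLACEHOLDER_PROTOCOL_ID, status]) + payload


def parse_advertisement(pdu: bytes) -> dict:
    """Pull the fields back out by offset."""
    return {
        "mac": pdu[0:6],
        "protocol_id": pdu[13],
        "near_owner": bool(pdu[14] & NEAR_OWNER_MASK),
        "payload": pdu[PAYLOAD_OFFSET:],
    }


adv = build_advertisement(bytes(6), near_owner=False, payload=bytes(MAX_PAYLOAD))
print(len(adv), MAX_PAYLOAD)  # 37 22

try:
    build_advertisement(bytes(6), near_owner=False, payload=bytes(28))  # P-224 key
except ValueError as err:
    print(err)  # 43 bytes exceeds the 37-byte legacy PDU
```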

As far as I can tell, the cryptographic pieces would go in the "proprietary company payload data" portion, though it's actually not clear to me precisely how this works in the case of AirTags. As Heinrich et al. describe, the BLE payload is quite small (31 bytes for the ADV_NONCONN_IND PDU) but the Bluetooth standard requires a 4-byte header for manufacturer-specific data, so Apple had to do some tricky engineering to get the P-224 public key (28 bytes) into the remaining 27 bytes of the packet (they repurpose part of the MAC address to do this).

It's not quite clear to me how Apple plans to stuff the public key into the 22 "proprietary payload" bytes (offsets 15-36), but presumably they have some plan in mind. Any readers who know how this is supposed to work should reach out.

Maybe they plan to send two packets?

The key point here is that this isn't enough of a specification to provide interoperability between systems. For instance, it wouldn't tell you enough to build your own tags which worked with Apple's tracking network; it's just supposed to be enough to tell you how to build your tracking tags so that they are detectable. Note the careful phrasing here: the document doesn't tell you how to detect tracking tags, it just tells you how to build tags which are detectable, and you are left to infer how to detect them.

Detecting Tracking Tags #

With that said, this document does help explain something confusing about the description I provided above, namely how devices are supposed to detect that a tag is following them if the identifier it broadcasts changes every 15 minutes. The answer appears to be that the BLE address changes much less often than that while the tag is away from its owner.

An accessory SHALL rotate its resolvable and private address on any transition from near-owner state to separated state as well as any transition from separated state to near-owner state.

When in near-owner state, the accessory SHALL rotate its resolvable and private address every 15 minutes. This is a privacy consideration to deter tracking of the accessory by non-owners when it is in physical proximity to the owner.

When in a separated state, the accessory SHALL rotate its resolvable and private address every 24 hours. This duration allows a platform's unwanted tracking algorithms to detect that the same accessory is in proximity for some period of time, when the owner is not in physical proximity.

The "resolvable" address refers to the BLE network address (MAC address). In other words, when in the separated state, the tag sends out beacon packets where the MAC address is constant for 24 hours even if the public key rotates every 15 minutes (and remember that the public key encryption piece isn't specified here). So presumably what you are supposed to do as a device is look for any tag (identified by MAC address) that has been following you for a while and, if so, alert the user. But how long is "a while"? Who knows? That's up to you.
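A sketch of the rotation rules quoted above might look like this. It assumes a simple tick-driven tag and uses random 6-byte values in place of real BLE resolvable private addresses; `AddressRotator` is a hypothetical name. The example spends an hour near the owner and then a day separated, counting how many distinct addresses an observer would see.

```python
import secrets

# Rotation intervals from the quoted text.
NEAR_OWNER_ROTATION = 15 * 60       # seconds
SEPARATED_ROTATION = 24 * 60 * 60   # seconds


class AddressRotator:
    """Toy model of the draft's address-rotation rules.

    Random 6-byte values stand in for real BLE resolvable private addresses.
    """

    def __init__(self, now: int, near_owner: bool):
        self.near_owner = near_owner
        self._rotate(now)

    def _rotate(self, now: int) -> None:
        self.address = secrets.token_bytes(6)
        self.since = now

    def set_state(self, now: int, near_owner: bool) -> None:
        """Any near-owner <-> separated transition forces a new address."""
        if near_owner != self.near_owner:
            self.near_owner = near_owner
            self._rotate(now)

    def tick(self, now: int) -> None:
        """Rotate if the current address has outlived its interval."""
        interval = NEAR_OWNER_ROTATION if self.near_owner else SEPARATED_ROTATION
        if now - self.since >= interval:
            self._rotate(now)


tag = AddressRotator(now=0, near_owner=True)
near_owner_addresses = set()
for minute in range(60):                # one hour with the owner
    tag.tick(minute * 60)
    near_owner_addresses.add(tag.address)

tag.set_state(3600, near_owner=False)   # owner walks away
separated_addresses = set()
for minute in range(60, 60 + 24 * 60):  # the following 24 hours
    tag.tick(minute * 60)
    separated_addresses.add(tag.address)

print(len(near_owner_addresses), len(separated_addresses))  # 4 1
```

An observer near the separated tag sees one stable address for the whole day, which is what gives a detection algorithm something to follow.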

Why not just rotate the address every 24 hours all the time? Two reasons: (1) rotating every 15 minutes while the tag is near its owner keeps it from triggering the detection algorithm, as long as that algorithm only fires after more than 15 minutes, and (2) it makes the tag less trackable in cases where it is traveling with its owner (see rotating IDs above). There is also a "near-owner" bit in the advertisement that says that the tag is near its owner and that detecting devices shouldn't treat it as tracking them.
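Since the detection side is left to each platform, the following is only one guess at a minimal heuristic: group sightings that don't have the near-owner bit set by MAC address, and flag any address heard over at least an hour (a hypothetical threshold) in at least two different places (an extra assumption, to avoid flagging a tag that is simply sitting near your home). The `Sighting` record and `suspicious_tags` function are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass

FOLLOWING_THRESHOLD = 60 * 60  # hypothetical: seconds before we call it "following"


@dataclass
class Sighting:
    time: int         # seconds
    mac: bytes        # advertised BLE address
    near_owner: bool  # near-owner bit from the advertisement
    my_location: str  # where this phone was when it heard the tag


def suspicious_tags(sightings, threshold=FOLLOWING_THRESHOLD, min_places=2):
    """Return addresses heard across >= threshold seconds in >= min_places places."""
    by_mac = defaultdict(list)
    for s in sightings:
        if not s.near_owner:  # tags next to their owners are ignored
            by_mac[s.mac].append(s)
    flagged = set()
    for mac, seen in by_mac.items():
        span = max(s.time for s in seen) - min(s.time for s in seen)
        places = {s.my_location for s in seen}
        if span >= threshold and len(places) >= min_places:
            flagged.add(mac)
    return flagged


tracker, neighbor, owned = b"\xaa" * 6, b"\xbb" * 6, b"\xcc" * 6
log = [
    Sighting(0, tracker, False, "home"),
    Sighting(1800, tracker, False, "cafe"),
    Sighting(4000, tracker, False, "office"),
    Sighting(0, neighbor, False, "home"),
    Sighting(5000, neighbor, False, "home"),  # never moves with us
    Sighting(0, owned, True, "home"),
    Sighting(7200, owned, True, "office"),    # traveling with its owner
]
print(suspicious_tags(log) == {tracker})  # True
```

A tag that changes its address every 15 minutes never accumulates an hour under one address, which is why the short near-owner rotation keeps tags traveling with their owners from triggering alerts, and also why firmware that rotated frequently while separated would slip past this kind of check.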

Once a tag is detected, it is also possible to connect to it directly and query its information (manufacturer, product type, etc.), as well as to cause it to play a sound. It is also possible to retrieve the device serial number as long as you can demonstrate close proximity, either via an NFC connection or some user action on the device itself (pressing a button, etc.).

The Broader Threat Model #

My bigger concern is that this document seems to be limited to a fairly narrow threat model, namely tracking by naive attackers who take an off-the-shelf tag and attach it to their victim. The Apple/Google document describes a set of behaviors that companies ought to build into their trackers to mitigate this threat, but unfortunately, this isn't the only threat.

It's already possible to buy relatively compact GPS trackers that don't depend on using Bluetooth to talk to other devices (see this older post for more on this topic). However, these trackers are expensive (about $300, plus a subscription), have battery lifetimes measured in days (or at best weeks), and are several centimeters across, so they are somewhat hard to conceal. By contrast, tracking tags like Tiles or AirTags have a combination of features that makes them more attractive for surveillance:

- They are compact (thus easy to hide)

- They are cheap (thus easy to obtain)

- They have long battery lifetimes (and thus are suitable for long-term surveillance)

These features are made possible by the existence of a widespread network of devices (phones, etc.) which can report the position of a lost tag. That network allows the use of much cheaper and more energy-efficient technologies than a tracker like the Garmin inReach, which needs both a GPS receiver and a satellite transmitter. It's that network that creates the risk, not the tracking tags themselves. Specifically, if the attacker can obtain a tag which can successfully be located with the tracking network but which doesn't conform to the behaviors specified in this document, then the detection mechanisms that this document anticipates will be less effective if not completely useless.

There are at least two possible ways for an attacker to obtain such a tag:

- Modifying an existing tag. The stock tags made by each manufacturer are cheap and generally reasonably well-engineered, so it's convenient for the attacker if they can just buy them and disable the anti-tracking features. For example, in his thorough AirTag teardown, Adam Catley observes that it's possible to disable the speaker in an AirTag and suggests that the tag be modified to check whether the speaker is actually making noise. Depending on the design of the tag, it might be possible to rewrite the firmware to violate the requirements in this document, for instance by rotating the MAC address frequently to evade detection (oddly, this document says "The accessory SHOULD have firmware that is updatable by the owner", which is the opposite of what you want here).

- Building an entirely new tag. Even if the stock tags are hard to modify, once it's public information how these devices are built, it's possible to make your own tags that don't have any anti-tracking features at all. In fact, this already exists in the form of OpenHaystack, built by the same team that published the PoPETS paper I've been relying on for most of this analysis. OpenHaystack is designed to run on commodity hobby hardware like the BBC micro:bit, which is quite a bit bigger than an AirTag, but obviously it would be possible for someone to engineer something compact and cheap, perhaps using the AirTag design as a starting point. Note that it doesn't really help that the specific design of any individual system is secret: there are tens of millions of these devices out there, and it just takes one person to reverse engineer a tag and publish the results.

Either of these attacks requires more sophistication than just buying an AirTag through Amazon, but the would-be stalker doesn't have to have that sophistication themselves; they just need some third party to start making and selling tags that are suitable for surveillance. If such devices become widely available, then the countermeasures Apple and Google are proposing will become much less effective. There's already a market for "stalking apps," so this seems like a real risk.

What you really want here is for it not to be possible to make a tag which participates in the tracking network without implementing the specified anti-tracking behaviors. This is a hard job under any circumstances (though see some handwaving ideas below), but is made much harder by specifying a design in which tracking detection pieces are specified at one level (the BLE layer) and the official "find my device" functionality is implemented in a proprietary layer that sits on top of that. That makes it very easy for an attacker to build their own tag that complies with the (reverse engineered) proprietary pieces but then violates the rules at the BLE layer. I can understand why Apple and Google, who each presumably have some proprietary design, want to avoid standardizing that piece, but the result is that the problem of detecting unwanted tracking is much harder.

Attestation #

The most straightforward approach is to assume that "official" devices behave correctly and then have some mechanism for recognizing official devices. The standard approach here is what's called an "attestation" mechanism, in which each legitimate device has some secret embedded by the manufacturer which can be used to prove that it's legitimate (e.g., by signing something). (See [here](/posts/verifying-software) for more on this.) Devices would then require tags to prove they were legitimate before reporting their location to the network. Of course, this secret has to be embedded in tamper-resistant hardware to prevent an attacker from stealing the secret and making their own fake devices.

Actually building a system like this in such a way that the attestation doesn't itself become a tracking vector (e.g., by having each device have a single attestation key which can then be tracked) is challenging cryptographically (this is also an issue with the WebAuthn public key authentication system), but there are some approaches that sort of work, or at least are somewhat better than the naive design.[4]

However, even if you know for sure that you are talking to a legitimate device, that doesn't necessarily tell you that it's acting as it's supposed to. As a simple example, you might have a device which sent the right BLE data but whose speaker had been disabled (or which was wrapped in sound-absorbing material). A fancier attacker might take a legitimate tag and proxy its signals to the device by putting it in a radio-absorbing case and then receiving and retransmitting whatever signals it sent, as shown below:

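[Figure: the attacker relays a legitimate tag's signals from inside a radio-absorbing case, retransmitting them with a rewritten MAC address.]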

In this example, the tag is in the separated state, so it is supposed to keep a constant MAC address (though presumably still rotate its public key). However, the attacker captures this message and rewrites the MAC address so it looks like a different device, fooling the detection algorithm.

This kind of cut-and-paste attack is possible to address by having the proprietary pieces that the network relies on enforce the correctness of the anti-tracking pieces (e.g., by signing the expected MAC address), but in order for this to work, they need to be aware of each other, which, as I said, isn't specified anywhere in this document. The point here is that successfully designing anti-tracking mechanisms requires analyzing the system as a whole, not just looking at one piece at a time. In particular, it's necessary to understand how the as-designed functionality works in order to build anti-tracking countermeasures which can't be separated from that functionality. And of course, in the case of the audible alerts, in some cases that may not be possible to do.
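As a rough illustration of "signing the expected MAC address", here is a toy sketch in which the tag signs its MAC address together with its proprietary payload, so a relay that rewrites the address produces an advertisement that no longer verifies. The Schnorr-style signature modulo 2^521 - 1 is a toy chosen only because it runs on the standard library; it is not a secure parameter choice, and a single fixed per-tag key like this would itself be a tracking vector, as discussed above. `signed_advert` and `network_accepts` are hypothetical names, not any vendor's API.

```python
import hashlib
import secrets

# TOY Schnorr-style signatures mod the Mersenne prime 2**521 - 1, base 3.
# Assumed parameters for illustration only; not a secure group.
P = 2**521 - 1
G = 3
ORDER = P - 1  # exponents can be reduced mod P - 1 (Fermat's little theorem)


def _challenge(r: int, message: bytes) -> int:
    digest = hashlib.sha256(r.to_bytes(66, "big") + message).digest()
    return int.from_bytes(digest, "big")


def keygen() -> tuple[int, int]:
    x = secrets.randbelow(ORDER - 1) + 1
    return x, pow(G, x, P)


def sign(x: int, message: bytes) -> tuple[int, int]:
    k = secrets.randbelow(ORDER - 1) + 1
    r = pow(G, k, P)
    s = (k + _challenge(r, message) * x) % ORDER
    return r, s


def verify(y: int, message: bytes, sig: tuple[int, int]) -> bool:
    r, s = sig
    return pow(G, s, P) == (r * pow(y, _challenge(r, message), P)) % P


def signed_advert(x: int, mac: bytes, payload: bytes) -> dict:
    """The tag signs its (fixed-length) MAC address together with its payload."""
    return {"mac": mac, "payload": payload, "sig": sign(x, mac + payload)}


def network_accepts(y: int, advert: dict) -> bool:
    """A reporting device refuses adverts whose MAC changed after signing."""
    return verify(y, advert["mac"] + advert["payload"], advert["sig"])


attestation_key, verification_key = keygen()  # hypothetical per-tag key pair
advert = signed_advert(attestation_key, b"\x11" * 6, b"rotating public key bytes")
relayed = dict(advert, mac=b"\x22" * 6)       # the relay rewrites the address
print(network_accepts(verification_key, advert))   # True
print(network_accepts(verification_key, relayed))  # False
```

This only works because the signature covers both the BLE-layer field and the proprietary payload; if the two layers were checked independently, the relay's rewrite would go unnoticed.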

Worse yet, we already have a giant installed base of devices which don't have any kind of attestation, and presumably vendors want them to continue to work. This means that even if we were to deploy a system with this kind of attestation today, attackers could still exploit it by pretending to be one of those old devices.

The Status of this Specification #

This is slightly off topic from the technical content of this post, but I think it's important to observe that this isn't an IETF specification. There has been some confusion on this point, in part due to Apple's misleading PR statement:

The specification has been submitted as an Internet-Draft via the Internet Engineering Task Force (IETF), a leading standards development organization. Interested parties are invited and encouraged to review and comment over the next three months. Following the comment period, Apple and Google will partner to address feedback, and will release a production implementation of the specification for unwanted tracking alerts by the end of 2023 that will then be supported in future versions of iOS and Android.

What's an RFC? #

RFC stands for "Request For Comments", and dates from the prehistory of the Internet when there wasn't a real standards process and people would just publish memos describing protocols. The IETF loves its traditions and "RFC" is now an important brand (so much so that other organizations such as the Rust Project now publish their own "RFCs" even though they have no connection to the IETF process). To make matters worse, there are also RFCs published in the same series as IETF RFCs that aren't standards, including those published in what's called the Independent Stream, which don't have any standards status and are just approved by a single Independent Submissions Editor.

That's pretty carefully worded, but it certainly gives the impression that Apple and Google want to standardize this work. The quote from Erica Olsen from the National Network to End Domestic Violence (NNEDV) is even more explicit, referring to these as "new standards" (and of course this is in Apple's press release, so it's not like they aren't aware of the context). Of course, there are other meanings to "standard" than "document produced by some Standards Development Organization", but in this context, the best you can say about this press release is that it's misleading in a way that is very convenient for Apple and Google, who would no doubt like the protective cover of appearing to standardize something while in fact acting unilaterally to address a problem they created by acting unilaterally.

Needless to say "two big companies submit a specification, take comments for three months, and then do whatever they feel like" is not the way that the IETF standards process works. The IETF lets anyone "submit" a specification by posting an Internet-Draft (ID) which is what Apple and Google have done, but those don't have any formal status. Some IDs will be adopted by the IETF as part of the standards process and some of those will actually be standardized and become RFCs. This process takes much longer than three months and involves achieving "rough consensus" of the IETF Community, not just a few vendors. I know that this sounds like standards inside baseball, but there is an important point here. One of the functions of standards is to ensure that there is widespread review from a variety of stakeholders, who might have a different viewpoint (for instance that actual interoperability is useful, or that you need a different set of tradeoffs between privacy and functionality), but the way that that works is that you need buy-in from those stakeholders before the standards are finished.

One critique you often hear is that the standards process is too slow and that this is why industry actors need to ship first and standardize later. The three month comment period seems to reflect that attitude (it's certainly true that the IETF can't standardize anything in three months). However, the decision by Apple and Google (and others!) to ship these technologies without real public review is one reason why we now are in a situation where they are being actively misused, something people have been expressing concerns about for two years. Apple/Google could have brought this work to IETF—or some other standards body—at any point during that time, but they chose not to do so, so arguments about how the situation is now too urgent to go through a real multistakeholder process don't really move me.

I regularly work with a lot of people from Apple and Google and those companies know how to bring work to IETF when they want to. This isn't it.

Final Thoughts #

As I said two years ago, this is a classic dual-use technology. It's really convenient to be able to find your stuff when you've lost it, but tracking tags just don't know whether they are attached to your stuff or other people's stuff. Trying to make it visible when you are being tracked via this method is probably about the best you can do, but it's also clear that it's a highly imperfect defense. Deploying this kind of defense is made even harder by having a large installed base of devices from multiple mutually incompatible networks, meaning that anything we do has to be backward compatible. It took us years to get into this hole; it will take a lot more than three months to get out.

[2023-05-08: Updated title.]

[1] Actually a hash of the public key.

[2] Heinrich et al. also report an issue in which an attacker is able to leverage temporary control of the user's device to steal $SK_i$ and afterwards can track the user. Apple has reportedly solved this by making the keys harder to learn, but this is a generically hard problem in an open system.

[3] The way this would work would be that the device encrypts the report as described above and then encrypts it yet again for the service. It would connect to a proxy, authenticate as a valid device, and then send the doubly-encrypted report. The proxy would then strip off the reporter's identity and forward the report to the service, which would remove the outer encryption layer and store it, just as before.

[4] Note that this would most likely not all fit into a single packet, but you could imagine that the reporting device would ask the tag to attest in a separate message before reporting its position to the service.