ELI15: Private Information Retrieval

Let's see if I can explain this with just high school math...

Posted by ekr on 30 Aug 2022

In my post on Safe Browsing I mentioned that one possible solution to the problem of querying the Safe Browsing database is Private Information Retrieval (PIR) and then waved my hands vigorously about it being crypto magic. In this post, I'm going to attempt to explain how PIR works with as simple math as possible. You will, however, want to read the Web version of this post because there is a fair bit of math and I use LaTeX to render it with MathJax, which looks bad in the newsletter version.

The PIR Problem #

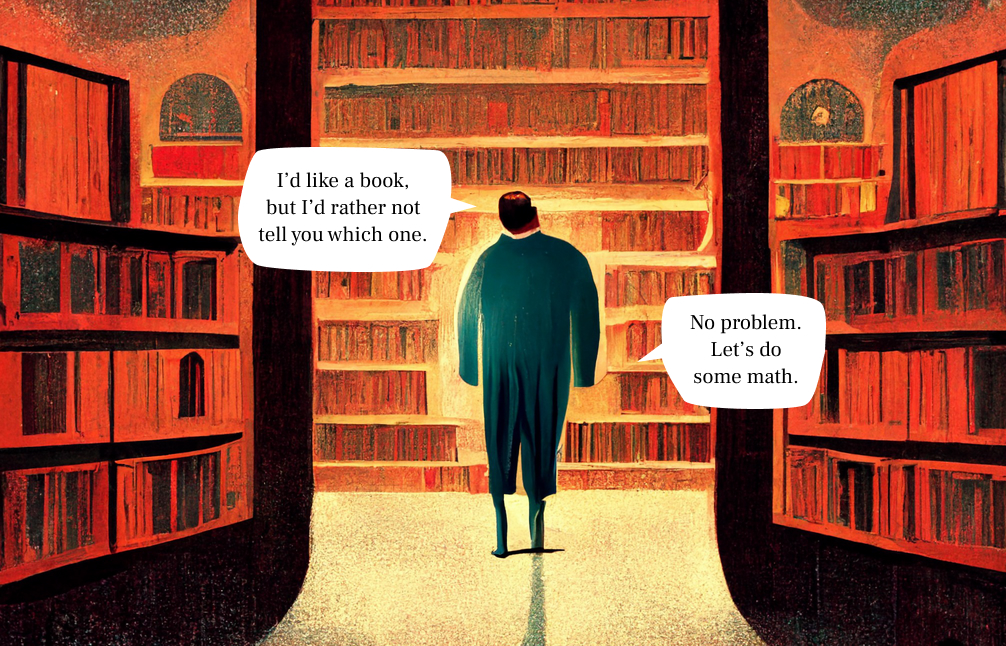

The basic version of the PIR problem looks like this:

- You have a server with some database $\mathbb{D}$ consisting of a set of $d$ elements $D_1, D_2, D_3, \ldots, D_d$.

- The client wants to retrieve the $i$th element $D_i$ but doesn't want the server to know which element it retrieved.

There is an obvious trivial solution[1] in which the server sends the client the entire database and the client just looks up the value it wants to know.[2] This provides privacy but at the expense of communication cost because you have to send the entire database. The challenge, then, is to build a system which involves sending less data, has comparable privacy, and doesn't chew up too much computational power.

There are two main flavors of PIR:

- Single server schemes

- Multiple server schemes

The single-server schemes are designed under the assumption that the server is malicious and use cryptographic mechanisms to protect against it. The multiple server schemes are designed under the assumption that some subset of the servers is non-malicious and are insecure if all the servers misbehave. In this post, I'll be talking solely about single-server PIR schemes; at some point in the future I might talk about multi-server.[3]

A Simple Insecure Solution #

The first observation to make (due to Beimel, Ishai, and Malkin) is that any single-server system must involve the server computing some function over every element in the database. Otherwise, the server could simply look at which elements were touched and learn something about which were retrieved. This tells us something about how things need to be constructed.

Let's start with a solution that's insecure but can serve as the basis for a secure solution. Take the database and arrange it in a square arrangement (a "matrix") like so:

$$ \begin{bmatrix} D_1 & D_2 & D_3 \\ D_4 & D_5 & D_6 \\ D_7 & D_8 & D_9 \\ \end{bmatrix} $$

In order to make a query, the client creates a list of numbers that consists of all 0s except for a 1 in the position corresponding to the column in the matrix that it wants to read. For instance, if it wants to read value $D_6$, which is in the third column, it would send the list below (I'm writing this vertically for reasons which will become apparent shortly).

$$ \begin{bmatrix} 0 \\ 0 \\ 1 \end{bmatrix} $$

The server constructs its response as follows. For each row in the matrix, it goes column by column, multiplying the value in its database by the client's value for that column, and adds up the results across the row. This produces a list with one value per row of the database (the same length as the client's input, because the matrix is square). In this case, we would then get:

$$
\begin{bmatrix}
D_1 \cdot 0 + D_2 \cdot 0 + {\color{red}D_3 \cdot 1} \\
D_4 \cdot 0 + D_5 \cdot 0 + {\color{red}D_6 \cdot 1} \\
D_7 \cdot 0 + D_8 \cdot 0 + {\color{red}D_9 \cdot 1} \\
\end{bmatrix} =
\begin{bmatrix}
D_3 \\
D_6 \\
D_9 \\
\end{bmatrix}
$$

As you can see, what's happened here is that the 0s erase the columns we're not interested in and we're just left with a list of the column of interest (in this case the rightmost one), shown in red. The client can then just read out the value of interest by looking at the right row.

Those of you who have taken linear algebra will recognize this as conventional matrix multiplication, where we multiply the database times the selection vector. However, you don't need to know that in order to understand what's going on.
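To make the insecure version concrete, here is a minimal Python sketch of the exchange above. The function names (`make_query`, `answer`) and the integer values standing in for $D_1 \ldots D_9$ are my own placeholders, not part of any real PIR library.

```python
# Insecure matrix-based retrieval: the query is sent in the clear.
# Names and values are illustrative only.

def make_query(column, width):
    """One-hot list: 1 in the wanted column's position, 0 elsewhere."""
    return [1 if j == column else 0 for j in range(width)]

def answer(db, query):
    """For each row, sum db[row][j] * query[j] over all columns j."""
    return [sum(value * q for value, q in zip(row, query)) for row in db]

# A 3x3 database; D_6 sits in row 1, column 2 (zero-indexed).
db = [[11, 12, 13],
      [14, 15, 16],
      [17, 18, 19]]

query = make_query(column=2, width=3)   # what the client sends
response = answer(db, query)            # the server's reply: the third column
d6 = response[1]                        # the client keeps only row 1
```

The server touches every entry of `db`, and the client discards everything in `response` except the row it cares about.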

It's worthwhile to stop and look at the properties of this design. In the trivial solution, the server had to send $d$ values to the client, whereas in this design the client has to send $\sqrt d$ values and the server sends $\sqrt d$. With a small database like this one, this is a trivial improvement, but for a large database $2\sqrt d$ is going to be much smaller than $d$. The server has to perform $d$ computations, one for each value in the database; as noted above, this is expected. Unfortunately, this scheme is also trivially insecure in that the server learns the column (though not the row) that the client is interested in so we need something fancier. The solution lies in a technology called "homomorphic encryption".

A More Secure Solution: Homomorphic Encryption #

Partially Homomorphic Encryption #

It's been known for a very long time how to do partially homomorphic encryption. As a concrete example, consider the case where you encrypt some data by XORing it with a key, i.e.,

$$Ciphertext = Plaintext \oplus Key$$

With this system, you can have the server compute the XOR of two plaintexts $P_1$ and $P_2$, given only the encrypted form. The client sends:

$$ (C_1, C_2) = (P_1 \oplus K_1, P_2 \oplus K_2)$$

The server returns:

$$ C_1 \oplus C_2 $$

Which the client XORs with $K_1 \oplus K_2$, i.e.,

$$P_1 \oplus K_1 \oplus P_2 \oplus K_2 \oplus K_1 \oplus K_2 $$

When you cancel out the keys ($A \oplus A = 0$) you get:

$$ P_1 \oplus P_2$$

The difference between partially and fully homomorphic encryption is that with a partially homomorphic system you can compute some functions on encrypted data but not others. With a fully homomorphic system you can compute any function, whereas this system is homomorphic with respect to XOR but not (say) to multiplication. The problem of fully homomorphic encryption had been open for a long time until Craig Gentry finally showed how to do it in 2009.
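The XOR example can be checked with a few lines of Python. This is only a sketch of the toy scheme described here; the helper `xor_bytes` and the sample plaintexts are my own illustrative choices.

```python
import secrets

def xor_bytes(a, b):
    """XOR two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))

p1, p2 = b"\x0f\xf0", b"\x33\x55"
k1, k2 = secrets.token_bytes(2), secrets.token_bytes(2)

# Client encrypts each plaintext under its own one-time key.
c1, c2 = xor_bytes(p1, k1), xor_bytes(p2, k2)

# Server combines the ciphertexts without knowing p1, p2, k1, or k2.
server_result = xor_bytes(c1, c2)

# Client strips K1 xor K2 to recover P1 xor P2.
recovered = xor_bytes(server_result, xor_bytes(k1, k2))
```

Whatever keys are drawn, `recovered` equals `xor_bytes(p1, p2)`, because each key appears exactly twice and cancels.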

The reason that our simple approach was insecure is that the server has to know which values in the client's list are 0 and which are 1, and so can easily determine which column the client wants. But what if the server could perform this computation without determining which of the client's values was 1? Kushilevitz and Ostrovsky figured out how to do this in 1997, using a technique called homomorphic encryption. A homomorphic encryption system is one in which you can operate on encrypted data without seeing the content of the data (see the sidebar for some intuition on this).

Specifically, we want a homomorphic encryption scheme which is homomorphic with respect to addition. I.e., if we have two ciphertexts $E(A)$ and $E(B)$, there is some way to compute $E(A + B)$ without knowing $A$ or $B$. All we have to do is have the client encrypt its 1s and 0s under a homomorphic system to which it knows the key, then send the encrypted versions to the server. The server can then perform the same computations as before, except with the encrypted data.

The way this works is that the client sends:

$$ \begin{bmatrix} E(0) \\ E(0) \\ E(1) \end{bmatrix} $$

The server would then compute:

$$
\begin{bmatrix}
D_1 \cdot E(0) + D_2 \cdot E(0) + {\color{red}D_3 \cdot E(1)} \\
D_4 \cdot E(0) + D_5 \cdot E(0) + {\color{red}D_6 \cdot E(1)} \\
D_7 \cdot E(0) + D_8 \cdot E(0) + {\color{red}D_9 \cdot E(1)} \\
\end{bmatrix} =
\begin{bmatrix}
E(0) + E(0) + {\color{red}E(D_3)} \\
E(0) + E(0) + {\color{red}E(D_6)} \\
E(0) + E(0) + {\color{red}E(D_9)} \\
\end{bmatrix} =
\begin{bmatrix}
E(D_3) \\
E(D_6) \\
E(D_9) \\
\end{bmatrix}
$$

The client receives this value, decrypts, and it's got the result. One thing that might be sort of confusing here is that I'm showing the server both adding, and multiplying, as in:

$$ D_1 \cdot E(0) + D_2 \cdot E(0) + D_3 \cdot E(1) $$

However, because the server is multiplying the encrypted value by a known value, it can do this just by addition, as in:

$$ E(2A) = E(A) + E(A) $$ $$ E(3A) = E(2A) + E(A) $$

So, all you need is an addition operation. There are, of course, tricks to make this faster. For instance, you can compute powers of two (1, 2, 4, 8, etc.) and then just build up the final value from those. If you want to multiply two encrypted values, e.g., $E(A) * E(B) = E(AB)$ then you need a fancier system, but that's not required here.
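The powers-of-two trick is usually called double-and-add. Here is a small sketch of it, assuming only that the encryption scheme gives us some `add` operation on ciphertexts and an encryption of zero. Both are passed in as parameters; for the demonstration I use plain integers as a stand-in for ciphertexts, which is obviously not encryption.

```python
def scalar_mul(ciphertext, k, add, zero):
    """Compute E(k*A) from E(A) using only the homomorphic `add`.

    Double-and-add: roughly log2(k) doublings rather than k - 1 additions.
    """
    result = zero            # E(0)
    power = ciphertext       # E(A), then E(2A), E(4A), ...
    while k > 0:
        if k & 1:            # this power of two is part of k
            result = add(result, power)
        power = add(power, power)
        k >>= 1
    return result

# Stand-in "ciphertexts": ordinary integers with ordinary addition.
plain_add = lambda a, b: a + b
thirteen_times_seven = scalar_mul(7, 13, plain_add, 0)
```

With a real additively homomorphic scheme you would pass its ciphertext-addition function as `add` and an encryption of 0 as `zero`; the loop itself does not change.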

Of course, finding a suitable homomorphic encryption scheme is tricky because you want something that is cheap to compute and has a small ciphertext. The original K-O scheme used a fairly inefficient homomorphic encryption system and much of the work here has been in finding better systems.

Detail: Homomorphic Encryption using ElGamal #

You don't need to understand how to build a homomorphic encryption algorithm in order to understand PIR, but it's sometimes helpful to see things written out. In this section, I describe a simple well-known scheme based on the ElGamal encryption algorithm.

In the ElGamal encryption system client and server share a known value $g$. In order to receive a message, an entity (say the client) creates a random value $y$ and publishes $g^y$. In order to encrypt a message $m$ to someone, you generate your own random value $x$ and then send the pair of values:

$$g^x, g^{xy} \cdot m$$

The recipient (who, recall, has $y$) can then do the following computation:

- Take $g^x$ from the message and raise it to $y$ to get $(g^x)^y = g^{xy}$

- Divide the second part of the message by $g^{xy}$ to recover $m$

Note that in ordinary integer math, given $g^a$ and $g$ it's easy to compute $a$, but we're going to be doing this in a setting where that computation is hard, namely modulo some prime $p$.[4] This is called the discrete logarithm problem or just "discrete log". The intuition is that you can compute $g^{xy}$ either by knowing $g^y$ and $x$ (which the sender does) or $g^x$ and $y$ (which the receiver does), but if you only know $g^y$ and $g^x$ you're stuck. Everything else is pretty much the same as normal math but just remember that part.

However, it turns out that this system is homomorphic with respect to multiplication, not addition. Consider the pair of ciphertexts:

$$E(m_1) = (g^{x_1}, g^{x_1y}m_1)$$ $$E(m_2) = (g^{x_2}, g^{x_2y}m_2)$$

If we multiply the first parts and the second parts together, we get:

$$E(m_1 m_2) = E(m_1) \cdot E(m_2) = (g^{x_1 + x_2}, g^{y(x_1 + x_2)}m_1m_2)$$

You can decrypt this exactly as before to get $m_1m_2$.
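Here is a toy version of this in Python, just to check the algebra. The parameters are my own assumptions for illustration: the modulus is the Mersenne prime $2^{127} - 1$ and the base is 3, neither of which is a sensible choice for real security, and the function names are made up.

```python
import secrets

P = 2**127 - 1   # toy prime modulus (assumption; not a secure parameter)
G = 3            # toy shared base

def keygen():
    y = secrets.randbelow(P - 2) + 1          # recipient's private value
    return y, pow(G, y, P)                    # (y, g^y)

def encrypt(pub, m):
    x = secrets.randbelow(P - 2) + 1          # sender's fresh random value
    return pow(G, x, P), (pow(pub, x, P) * m) % P   # (g^x, g^{xy} * m)

def decrypt(y, ct):
    c1, c2 = ct
    shared = pow(c1, y, P)                    # (g^x)^y = g^{xy}
    return (c2 * pow(shared, P - 2, P)) % P   # divide by g^{xy} (Fermat inverse)

def multiply(ct_a, ct_b):
    """Component-wise product: E(m1) * E(m2) = E(m1 * m2)."""
    return (ct_a[0] * ct_b[0]) % P, (ct_a[1] * ct_b[1]) % P

y, pub = keygen()
product = multiply(encrypt(pub, 6), encrypt(pub, 7))
plaintext = decrypt(y, product)
```

Decrypting `product` gives 42 even though nobody ever multiplied 6 and 7 in the clear.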

However, I said above that we wanted something that was homomorphic with respect to addition, not multiplication. The trick here is that instead of encrypting message $m$ you instead encrypt $g^m$. Thus, the result becomes:

$$g^{m_1} \cdot g^{m_2} = g^{m_1 + m_2}$$

And you just need to take the discrete log to recover $m_1 + m_2$. But didn't I just say that taking discrete logs is hard? Basically, this works fine as long as the value to be retrieved is relatively short. So, for instance, if we restrict ourselves to retrieving a single bit, then you just need to compare against $g^0$ or $g^1$. The limit depends a bit on computational power, but it's fairly practical to retrieve 32-bit values with the right algorithms (for smaller values like 8 bits you can just build a table).

To use this system in practice, the client is just going to encrypt to itself by generating a key that it knows, but otherwise we just use this system as-is.
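Putting the pieces together, here is a toy end-to-end sketch of the query, using ElGamal "in the exponent" as described above. Everything here is an assumption made for illustration: the parameters ($2^{127} - 1$ and base 3) are not secure, the function names are invented, and the database values are kept tiny so that a brute-force discrete log is enough.

```python
import secrets

P = 2**127 - 1   # toy prime modulus (assumption; not a secure parameter)
G = 3            # toy base

def keygen():
    y = secrets.randbelow(P - 2) + 1
    return y, pow(G, y, P)

def encrypt(pub, m):
    """Exponential ElGamal: encrypt g^m instead of m."""
    x = secrets.randbelow(P - 2) + 1
    return pow(G, x, P), (pow(pub, x, P) * pow(G, m, P)) % P

def add(ct_a, ct_b):
    """E(a) 'plus' E(b): multiplying components adds the exponents."""
    return (ct_a[0] * ct_b[0]) % P, (ct_a[1] * ct_b[1]) % P

def scale(ct, k):
    """E(k*a) from E(a) and a known integer k (repeated addition as a power)."""
    return pow(ct[0], k, P), pow(ct[1], k, P)

def decrypt_small(y, ct, limit=64):
    """Recover m by brute-force discrete log over 0..limit-1."""
    g_m = (ct[1] * pow(pow(ct[0], y, P), P - 2, P)) % P
    for m in range(limit):
        if pow(G, m, P) == g_m:
            return m
    raise ValueError("plaintext out of range")

def server_answer(db, query):
    """Same row-by-row sum as the insecure scheme, but over ciphertexts."""
    response = []
    for row in db:
        acc = (1, 1)   # a trivial encryption of 0
        for value, ct in zip(row, query):
            acc = add(acc, scale(ct, value))
        response.append(acc)
    return response

db = [[1, 2, 3],
      [4, 5, 6],
      [7, 8, 9]]

y, pub = keygen()                                   # client encrypts to itself
query = [encrypt(pub, bit) for bit in (0, 0, 1)]    # wants the third column
response = server_answer(db, query)
column = [decrypt_small(y, ct) for ct in response]
```

The server only ever sees three random-looking ciphertexts, yet `column` comes out as the third column of `db`, and the client reads its value from the row it wanted.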

Complexity #

This is all pretty cool and it's better than nothing, but it's also not very efficient: in order to retrieve a single value you need to send $2\sqrt d$ values ($\sqrt d$ values in each direction) and the values themselves are relatively large (in basic ElGamal, from 512 bits to 8192 bits[5]). On the other hand, if the database is large, it's still more efficient than sending the whole database. The database size is $d$ values, so if each value is a single bit (as in the original K-O scheme), the breakeven point where you send less data than you would just by sending the database is around $512^2$ (about 260,000) entries if you are using an efficient version of ElGamal. The situation with the original K-O system was even worse.

In terms of computational complexity, the server has to compute over each database entry, so that's $d$ units of work—recall that you have to compute over each value in order to have a PIR system. The client only has to compute the $\sqrt d$ input values, then decrypt the relevant returned values and take the discrete log, so that's fairly cheap.

Improvements #

As described in the original K-O paper, it's possible to significantly improve the basic scheme by being a little clever.[6]

Reusing the Client's Vector #

The original K-O scheme was even worse than what I have presented above in that you could only extract single-bit values. This meant that if you wanted to extract multiple-bit values, you naively just repeat the protocol for each bit, so both the database size and the PIR protocol scale linearly with entry size.

This leads to an obvious improvement: say that I want to read values from a database where each entry is 8 bits rather than 1. As noted above, the client could just send 8 input vectors, but why? The client's vectors all pick out the same column in the database and they're not specific to anything on the server side.

Instead, the server can just compute its results for each of the 8 bits of the database using the same client input. The server then sends back $8 \sqrt d$ values, with the first $\sqrt d$ being for the first bit, the next $\sqrt d$ for the next bit, etc. but all computed over the same client input. This gives you a total communications complexity of:

$$ C (1 + \sqrt d + b \sqrt d) $$

Where $b$ is the number of bits to be extracted and $C$ is the size of the homomorphic encryption ciphertext. It also means you don't need multiple round trips. Of course, if you have a fancier scheme that lets you extract values that are greater than one bit, then this trick becomes less interesting. However, if you need to extract big values that make discrete log impractical (say 100 bits) then it becomes useful, because you can extract the value in pieces, each of which is easy to compute discrete log on.
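A sketch of the bit-slicing idea, done in the clear for readability (in the real protocol the entries of `query` would be ciphertexts and the sums would be homomorphic). The names `bit_plane` and `answer` and the sample database are hypothetical.

```python
def answer(bit_matrix, query):
    """Row-by-row selection, as in the basic scheme."""
    return [sum(v * q for v, q in zip(row, query)) for row in bit_matrix]

def bit_plane(db, bit):
    """Matrix holding only bit number `bit` of every database entry."""
    return [[(value >> bit) & 1 for value in row] for row in db]

B = 8   # bits per entry
db = [[0x11, 0x22, 0x33],
      [0x44, 0x55, 0xA6],
      [0x77, 0x88, 0x99]]

query = [0, 0, 1]   # sent once, reused for every bit plane

# Server: one response vector per bit plane, all from the same query.
responses = [answer(bit_plane(db, bit), query) for bit in range(B)]

# Client: reassemble the wanted row (row 1) from its bit in each plane.
wanted_row = 1
value = sum(responses[bit][wanted_row] << bit for bit in range(B))
```

The client uploads one vector and gets back $b$ response vectors, which is where the $b \sqrt d$ term comes from.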

Recursion #

The next optimization requires a little more cleverness. Recall that the server sends the client values corresponding to each row in the database but that the client only cares about one of the rows. Say we have a database that consists of $d$ values and so our matrix is $\sqrt d$ on each side. The client sends $\sqrt d$ values and the server replies with $\sqrt d$ values. The client only cares about the $i$th value in the server's response, but it can't tell the server that because that would tell the server which row it was interested in.

The key insight here is that this itself is a PIR problem, with the database consisting of $\sqrt d$ values of length $C$. In the naive protocol described above, the server sends the entire database to us, but we only care about $\frac {1}{\sqrt d}$th of it. We can use the same PIR scheme to request just the pieces we care about, one at a time.

But why stop there? We can keep using the same trick! Imagine we have a really big database of $2^{48}$ entries. Then even the second level database representing $\sqrt d$ entries in the server's response is going to be quite large, which means that the PIR problem of extracting one element out of that vector is also expensive. But we can do the same thing again. It's turtles all the way down!

Further Improvements #

Even with the optimizations above, we're still left with a system which isn't very efficient, especially for smaller data sets, where it's quite a bit worse than just transferring the entire database (the advantage goes as a factor of $\sqrt d$). In the 25 years since the original Kushilevitz and Ostrovsky paper, there has been quite a bit of work in this area.

This seems to fall into a small number of buckets.

Improving the Inner Loop #

If you step back and look at the basic design of the K-O protocol, it looks like this (I'm using the linear algebra matrix multiplication notation here, but really the $\times$ just denotes that we are doing whatever our core operation is in criss-cross fashion, as before):

$$
\begin{bmatrix}
V_1 & V_2 & \color{red}{\mathbf{V_3}} \\
V_4 & V_5 & \color{red}{\mathbf{V_6}} \\
V_7 & V_8 & \color{red}{\mathbf{V_9}} \\
\end{bmatrix}
\times
\begin{bmatrix}
0 \\
0 \\
\color{red}{\mathbf{1}} \\
\end{bmatrix}
\rightarrow
\begin{bmatrix}
\color{red}{\mathbf{V_3}} \\
\color{red}{\mathbf{V_6}} \\
\color{red}{\mathbf{V_9}} \\
\end{bmatrix}
$$

In other words, the input vector supplied by the client operates on each row of the database, picking out the column of interest to the client and ignoring the other values (remember that rows in the input vector correspond to columns we want to select). The server sends back each resulting row and the client reads the row of interest, ignoring the others. This basic structure holds whether the operation being performed is simple multiplication (as in our insecure example) or homomorphic encryption.

This means that the cost of the system is determined by the basic scaling properties of $2 \sqrt d$ communications cost and $d$ computational cost, but multiplied by the cost of the homomorphic encryption system. The more efficient the homomorphic encryption system is, the more efficient the whole thing will be. There has been a fair amount of work invested in finding more efficient homomorphic encryption algorithms to plug in here.

Reducing the Client's Input Vector #

There is another cute trick we can play, that's a natural extension of the techniques we have already seen. Suppose that we have a homomorphic encryption scheme that lets me:

- Add as many encrypted values as I want

- Do a single multiplication of two encrypted values

In this case, we can reduce the communication cost further, as described by Boneh, Goh, and Nissim. Instead of sending a single list of encrypted values, containing a single (encrypted) 1, the client sends a pair of lists, each containing a single (encrypted) 1. The server then computes the product of every pair of values, one taken from each list, e.g.,

$$ \begin{bmatrix} 1 \\ 0 \\ \end{bmatrix} \begin{bmatrix} 0 \\ 1 \\ \end{bmatrix} \rightarrow \begin{bmatrix} 0 & 1\\ 0 & 0 \\ \end{bmatrix} $$

We can then lay this out in a deterministic order left to right and top to bottom (though any rule will work) as a single list, like so:

$$ \begin{bmatrix} 0 \\ 1\\ 0 \\ 0 \\ \end{bmatrix} $$

This list can then be used as the input to the standard K-O protocol, and we've just reduced the total number of values the client sends from $\sqrt d$ to about $2\sqrt[4]{d}$ (the server to client communication remains unchanged). We can actually improve the situation further by changing the structure of the database to be non-square, instead having $\sqrt[3]{d}$ rows and $(\sqrt[3]{d})^2$ columns. In this case, the client sends two input vectors, each of which is $\sqrt[3]{d}$ long, and the server maps them onto a $(\sqrt[3]{d})^2$ long vector. It does the same criss-cross trick as before, producing a result that is $\sqrt[3]{d}$ long and sends it to the client, for a total communications cost of about $3 \sqrt[3]{d}$.
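The expansion step is easy to see in the clear. In this sketch the products are ordinary integer multiplications; in the actual scheme they would be the single homomorphic multiplication the encryption system allows. The helper names (`one_hot`, `client_vectors`, `expand`) are hypothetical.

```python
def one_hot(index, length):
    return [1 if i == index else 0 for i in range(length)]

def client_vectors(target, n):
    """Split a position in a length n*n list into two length-n one-hot lists."""
    return one_hot(target // n, n), one_hot(target % n, n)

def expand(first, second):
    """All pairwise products, laid out left to right, top to bottom."""
    return [a * b for a in first for b in second]

# The example from the text: [1, 0] and [0, 1] expand to [0, 1, 0, 0].
expanded = expand([1, 0], [0, 1])

# A larger case: select position 11 out of 16 by sending only 4 + 4 values.
first, second = client_vectors(target=11, n=4)
big = expand(first, second)
```

The client sends $2n$ values and the server ends up with an $n^2$-long selection list, which it then feeds into the usual row-by-row computation.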

Precomputation #

One interesting recent development in PIR is the design of systems which use precomputation to make the PIR process cheaper. The basic idea is that with a suitable homomorphic algorithm the server and client can perform some initial exchange, presumably involving some computation and the exchange of some data (a "hint"). Once the hint has been exchanged, the client can make individual queries much more cheaply. This makes sense for applications like Safe Browsing where the client is likely to make a lot of queries and so you can amortize the hint.

The specific precomputation techniques vary. In some designs, the client and server perform some client-specific precomputation and in others like SimplePIR, the server just does the computation itself and distributes the hint to every client.

Other Designs #

I've focused here specifically on designs that follow this K-O model, largely because they are intuitively easy to explain. There are also designs (for instance Cachin, Micali, and Stadler and Gentry and Ramzan) that are based on other structures and involve sending less data but at increased computation cost. The math here is a lot harder—I only somewhat understand it myself—so I'm not going to try to explain them here.

The Big Picture #

In conclusion, I'd like to make two points here. First, this is a really counterintuitive result, at least to me: we can allow a client to read some fraction of the server's data without the server learning anything about which values the client wants and in a fashion more efficient than just sending the client all the data. Hopefully, this post gives some intuition for why that's possible, thus rendering it less counterintuitive if not precisely obvious.

Second, PIR is an immensely powerful primitive. There are a whole pile of problems which would be much easier if we had efficient PIR, ranging from Safe Browsing, to messaging interoperability, to authentication for phone calls. We're not yet at the point where you can just drop in PIR the way you would drop in TLS, without really thinking about the cost, but we are getting closer to the point where some of these applications are practical. In fact we may already be there in some cases.

Acknowledgement #

Thanks to Henry Corrigan-Gibbs for assistance with this post. All mistakes are of course mine.

[1] This is the standard lead-in to this problem, as seen, for instance, in the Wikipedia article.

[2] Indeed, the longer hashes version of Safe Browsing is precisely this.

[3] As an aside, it's known that it's not possible to have information theoretic security with a single server. You have to depend on some cryptographic assumption. There are information theoretically secure versions of multi-server PIR, as long as some of the servers are not malicious.

[4] Yes, yes, or on an elliptic curve or something.

[5] Recall that you have to send two values for each ciphertext.

[6] Note: I am using a somewhat different presentation order which I think is easier to understand.